'Realtime' Generative AI Physical Performance

When DJing, I'm always tweaking physical audio controls to add expressiveness to the mix. Generative video with Stable Diffusion doesn't have a way to add expressiveness in realtime since it's slow to render, and I didn't want to draw keyframes on a screen.

I was curious how I could introduce a live performance aspect to the AI art style that I'd been developing. I decided to see if I could perform 'blind' just to the music track, and then input the performance data into Stable Diffusion to have it react in sync during the render process.

I had already made the music for the video (a drum & bass DJ mix) and added the audio tracks to Ableton Live.

With a Korg NTS1 connected, I played a track in Ableton. While playing, I adjusted the knobs on the NTS1, imagining how it might affect parameters in the AI render that would happen later. It was hard to know how the performance would affect the visuals, so there was an aspect of uncertainty in that 'blind' performance without instant visual feedback.

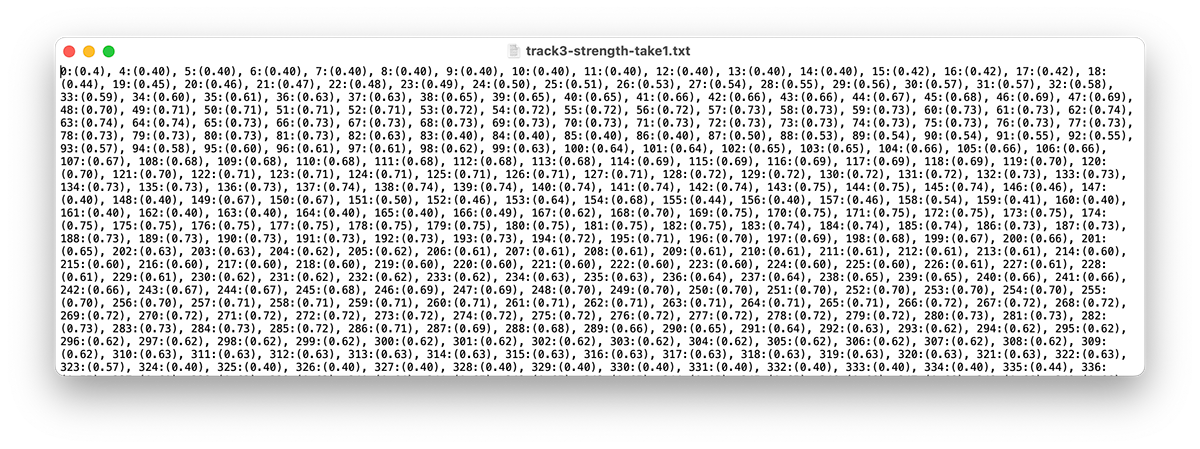

The performance was sent from Ableton Live to a Python script using a Max for Live instrument that could transmit OSC data. The Python script received values from 0 to 1 and saved them out as Stable Diffusion animation schedules.
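As a rough sketch of what that saving step could look like (not the actual script), the snippet below turns timestamped knob values into a keyframe string. The `frame:(value)` schedule format, the `samples_to_schedule` name, and the frame rate are all assumptions for illustration. Receiving the OSC messages is left out, since that would need a third-party library such as python-osc.

```python
def samples_to_schedule(samples, fps=15):
    """Turn (seconds, value) knob samples into a keyframe schedule string.

    Assumption: the renderer accepts 'frame:(value)' pairs separated by commas.
    """
    by_frame = {}
    for seconds, value in samples:
        frame = int(round(seconds * fps))  # snap the timestamp to the nearest frame
        by_frame[frame] = value            # the last sample landing on a frame wins
    return ", ".join(
        f"{frame}:({by_frame[frame]:.3f})" for frame in sorted(by_frame)
    )


# Hypothetical knob sweep recorded over two seconds
print(samples_to_schedule([(0.0, 0.0), (1.0, 0.5), (2.0, 1.0)], fps=10))
```

Keying on frame number rather than time keeps the schedule aligned with the render even if the knob data arrives at an uneven rate.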

The process was repeated several times for each track to control different parameters of the diffusion process. Diffusion strength affected how much the image changed per frame. Zoom, translation, and angle controlled camera motion. Other, more esoteric parameters that could be animated, like Sampler and Checkpoint, gave additional creative options. All the animation parameters were then input into Stable Diffusion and a render could begin.

The AI render process was when I could finally see what the performance on the Korg NTS1 would do to the AI-generated image - which was unpredictable to say the least. Sometimes the image would be affected too much and go nuts, sometimes it wouldn't do anything, sometimes it would create some wild hallucinations.

In the example below the initial rotation was too fast, the zoom was faster than I wanted, and the image was changing too little.

Sometimes a performance would look great until right near the end, and then go all wonky - which was a bummer since renders took hours to complete. This process required going back and re-doing many performances - learning what values worked well for each song. The Python script was also useful to constrain values for different parameters.
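One way to constrain values, sketched here under assumptions, is to clamp each knob reading to 0-1 and then map it into a safe range per parameter. The parameter names and ranges in `RANGES` are made up for illustration and are not the values used for the video.

```python
# Hypothetical safe ranges per animated parameter (illustrative values only)
RANGES = {
    "strength": (0.4, 0.75),
    "zoom": (0.98, 1.05),
    "angle": (-2.0, 2.0),
}


def constrain(param, knob):
    """Map a knob value (nominally 0-1) into the safe range for one parameter."""
    lo, hi = RANGES[param]
    knob = min(1.0, max(0.0, knob))  # clamp stray readings into 0-1 first
    return lo + knob * (hi - lo)     # linear map from 0-1 onto lo-hi


print(constrain("angle", 0.5))
print(constrain("zoom", 1.0))
```

Narrowing a range after a bad render is then a one-line change, rather than a re-recorded performance.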

I figured out some things as I worked through the songs in the DJ mix, most crucially that a 30-minute video would be about 30,000 frames of animation - a pretty huge number of frames to generate - and a lot of time to wait for rendering to finish!
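The arithmetic behind that is simple enough to sketch. The frame rate and the seconds-per-frame figure below are placeholders, not measurements from this project. Note that 30,000 frames over 30 minutes works out to roughly 16.7 frames per second.

```python
def frame_count(minutes, fps):
    """Number of frames needed for a video of the given length."""
    return round(minutes * 60 * fps)


def render_hours(frames, seconds_per_frame):
    """Total render time in hours at a fixed cost per frame."""
    return frames * seconds_per_frame / 3600


print(frame_count(30, 15))                 # frames for 30 minutes at a hypothetical 15 fps
print(30_000 / (30 * 60))                  # frame rate implied by 30,000 frames
print(render_hours(30_000, 3))             # hours at a hypothetical 3 seconds per frame
```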

All in all, the experiment was a success and the video is a brain-melting visual journey. It's pretty intense, which goes well with the high energy drum & bass mix!

For the next project I'll likely try a shorter song so I can refine and iterate on the performance workflows, and have more time crafting a story and refining smaller moments.