CHALLENGE

Driving in a connected world can be dangerous. Taking your eyes off the road to interact with car interfaces is a risk. Until fully automated self-driving cars are the norm, alternative ways of interaction are needed to foster safety on the roads.

Current Tesla displays require user touch and attention to operate.

Rethinking the interface would mean adding a voice-activated intelligent assistant that helps users drive more safely.

Predictive features suggest actions, while built-in sensors with a gestural language help users have safer interactions.

PAIN POINTS & TOUCH POINTS

After some research, I identified drivers' most common pain points, then devised new predictive, voice, and gestural features that work together to simplify user input.

THREE LAYERS OF INTERACTIONS FOSTER SAFER DRIVING PRACTICES

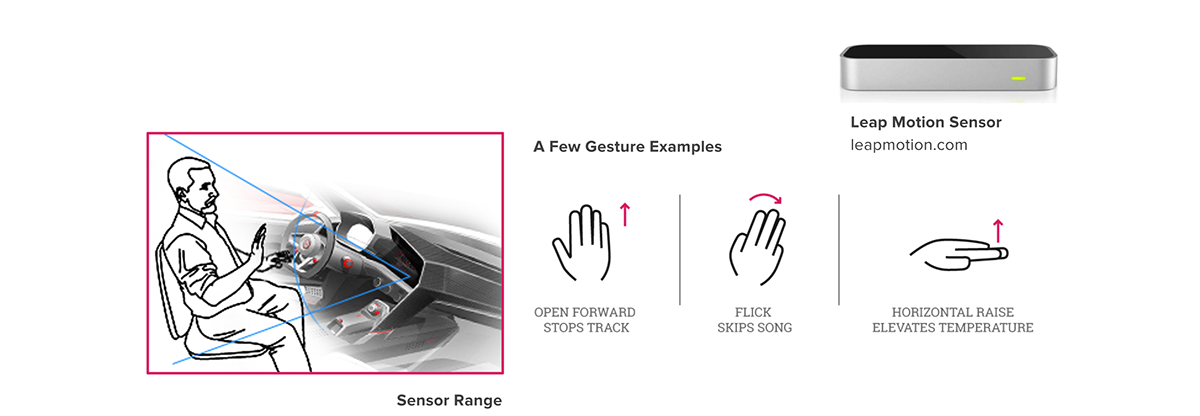

1- SENSORS WITH GESTURAL LANGUAGE

Sensor technology similar to Leap Motion would interpret a series of natural gestural commands, such as an open hand moving forward to pause a track, a flick of the hand to skip a song, or a raised horizontal hand to turn the AC temperature up.

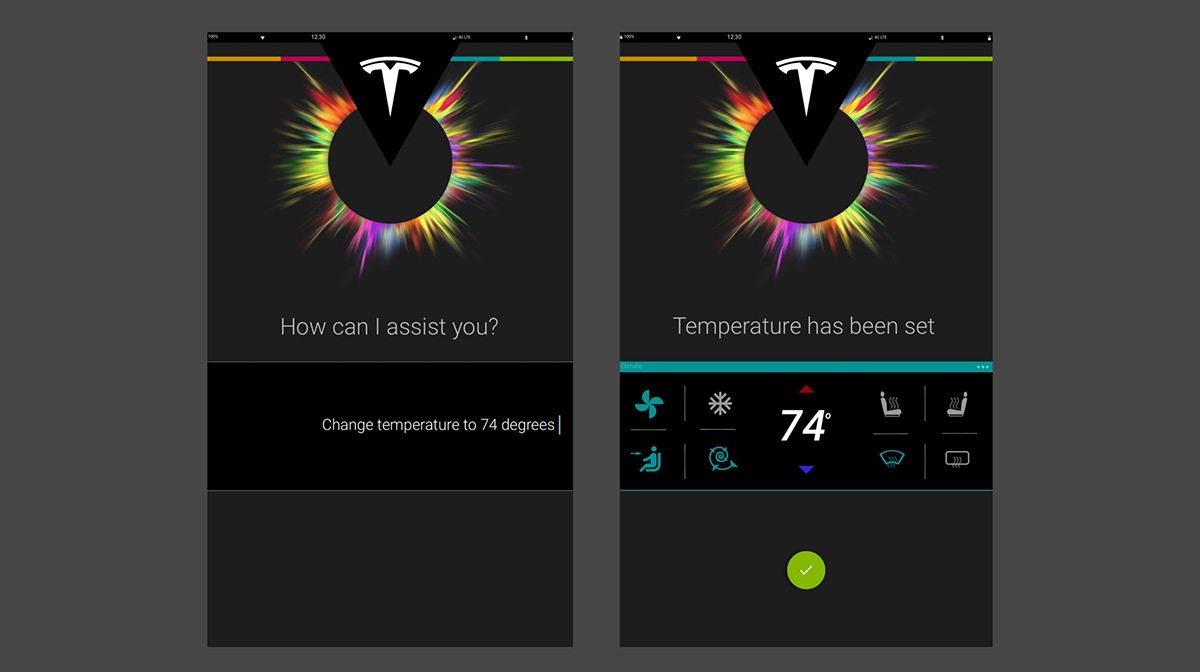

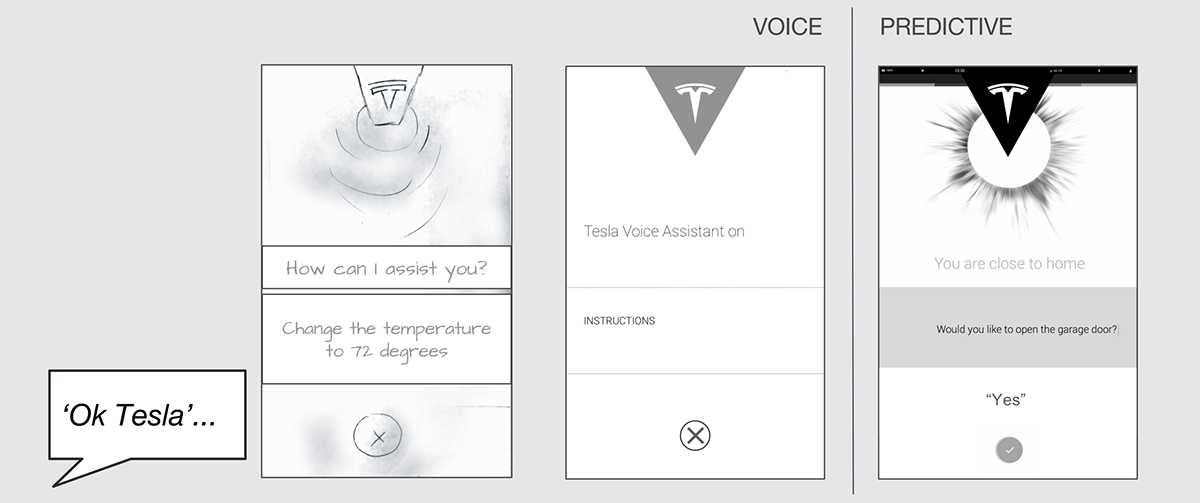
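
The gestural language described above can be thought of as a lookup from recognized gestures to car commands. The following is a minimal sketch, assuming the sensor already reports a recognized gesture; the `Gesture` names, the command strings, and `command_for` are all hypothetical and not part of any real sensor or vehicle API.

```python
from enum import Enum, auto


class Gesture(Enum):
    """Gestures named in the concept (identifiers are hypothetical)."""
    OPEN_HAND_FORWARD = auto()
    HAND_FLICK = auto()
    HORIZONTAL_HAND_RAISE = auto()


# Hypothetical command names; a real car would expose its own interface.
GESTURE_COMMANDS = {
    Gesture.OPEN_HAND_FORWARD: "pause_track",
    Gesture.HAND_FLICK: "skip_song",
    Gesture.HORIZONTAL_HAND_RAISE: "ac_temperature_up",
}


def command_for(gesture):
    """Return the command mapped to a gesture, or None if it is unmapped."""
    return GESTURE_COMMANDS.get(gesture)
```

Keeping the mapping in a single table would make it easy to test gestures with drivers and swap assignments without touching the recognition layer.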

2- VOICE COMMAND AND SOUND NOTIFICATIONS

A voice-activated command system can simplify the challenge of accessing car features while driving safely. The system is always listening and is activated by a key phrase.

3- SMART, PREDICTIVE AND SAFE INTERACTIONS

Predictive features allow drivers to maintain focus on the road.

The AI anticipates user needs based on patterns, geolocation, and user preferences. The driver is presented with a series of features that require minimal input. Spoken notifications and voice responses let the driver confirm or reject calls to action (CTAs).

For example, when the user is arriving home, the assistant would ask whether to open the garage door, based on geolocation data.
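
One way the garage door scenario could work is a simple geofence check: when the car comes within a set distance of home, the assistant speaks a prompt and waits for a voice response. This is a rough sketch under stated assumptions; the 150 m radius, the prompt wording, and the function names `haversine_m` and `garage_prompt` are illustrative choices, not part of the concept itself.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two lat/lon points (degrees)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def garage_prompt(car, home, radius_m=150.0):
    """Return a spoken prompt when the car is inside the home geofence.

    `car` and `home` are (latitude, longitude) tuples. The radius is an
    assumed value that would need tuning with real drivers.
    """
    if haversine_m(*car, *home) <= radius_m:
        return "You are almost home. Open the garage door?"
    return None
```

The driver would then confirm or reject the prompt by voice, so no touch input is needed.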

VISUAL EXPLORATION