tmac - total material appearance capture

tmac is a new generation of PBR (Physically Based Rendering) surface texture capture device. It accommodates flat samples of fabric, suede, wood or wallpaper of up to 1.5 x 1.0 meters. A capture results in SVBRDF maps (albedo, normal, specular, roughness, etc.) that can be fed into common 3D applications.

It features ScanTray, a sample-handling in/out tray with a whopping 1 x 1 meter, fully underlit BlackShine surface, and a family of SamplesAttach accessories. Samples are captured with an industry-leading 3-step-LightSimulation: a Spotlight capture, a Diffused light capture and an Underlight capture, handled by eight arms of the original Octopus design.

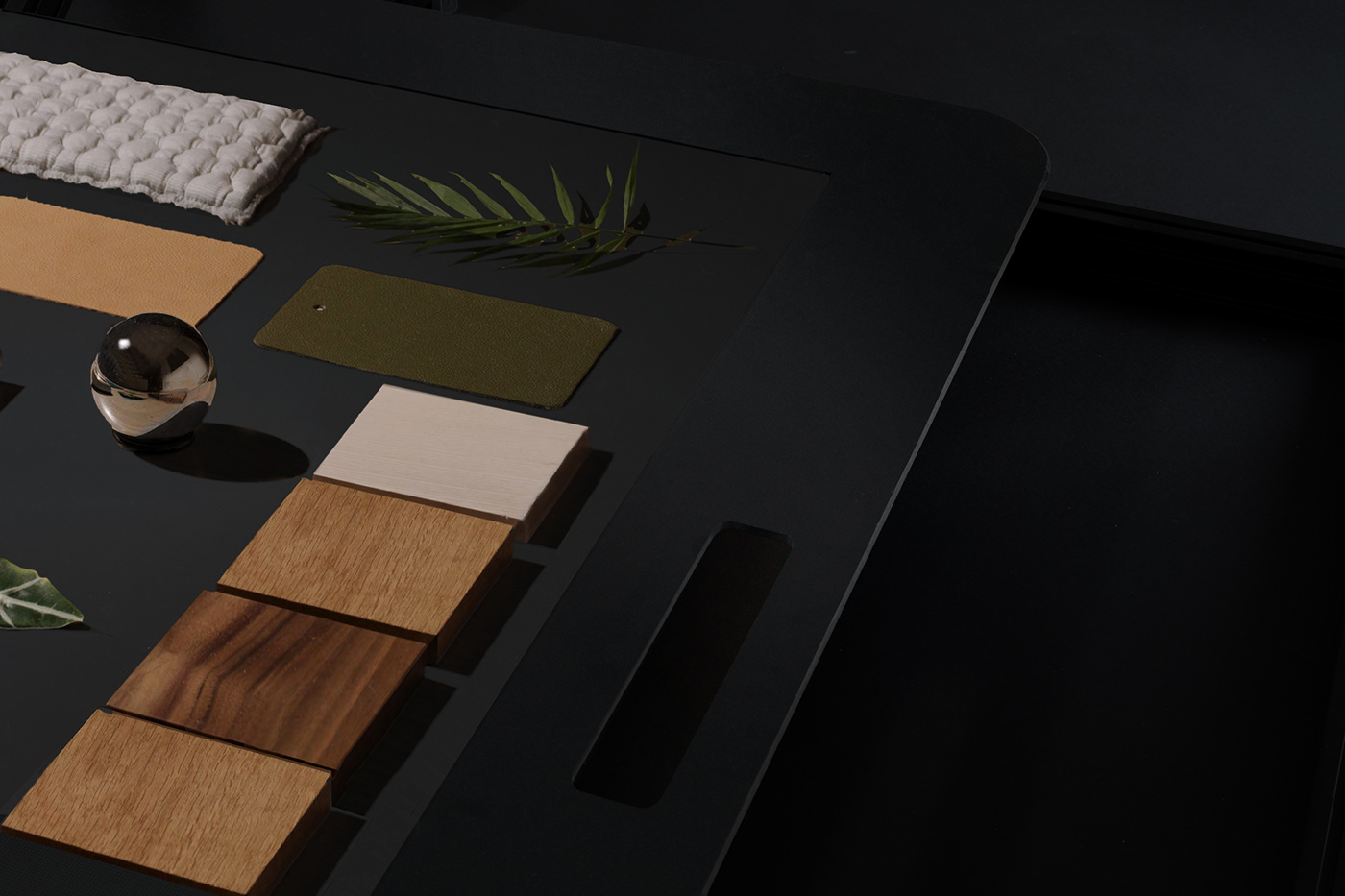

Samples such as fabric, wood, wallpaper, suede or leather are inserted into the device, which creates a digital twin of the surface for use in virtual worlds, 3D applications and visualization.

Automated capture completes in 40 seconds and saves a DigitalTwin of almost any material. tmac is based on photometric stereo and features a 120°C heat-resistant, high-contrast MidOpt SpotlightPolarizer with an original click in/out design, and a 400°C heat-resistant, 99% light-transmission AntiReflection industrial AR70 glass.
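For readers new to photometric stereo, here is a minimal sketch of the textbook Lambertian version for a single pixel. It is not tmac's actual processing pipeline: the function names, the ring of eight lights at 45 degrees elevation and the purely diffuse surface model are all assumptions made for illustration.

```python
import math


def solve3(a, b):
    """Solve the 3x3 linear system a @ x = b with Cramer's rule."""
    def det(m):
        return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
                - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
                + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

    d = det(a)
    if abs(d) < 1e-12:
        raise ValueError("light directions are degenerate (coplanar)")
    x = []
    for col in range(3):
        m = [row[:] for row in a]
        for r in range(3):
            m[r][col] = b[r]  # replace one column with the right-hand side
        x.append(det(m) / d)
    return x


def photometric_stereo_pixel(lights, intensities):
    """Estimate (albedo, unit normal) for one pixel, assuming a Lambertian surface.

    lights: unit light directions (lx, ly, lz), one per capture.
    intensities: observed pixel intensity for each capture.
    Solves lights @ g = intensities in the least-squares sense, where
    g = albedo * normal, via the normal equations (L^T L) g = L^T I.
    """
    ata = [[sum(l[i] * l[j] for l in lights) for j in range(3)] for i in range(3)]
    atb = [sum(l[i] * v for l, v in zip(lights, intensities)) for i in range(3)]
    g = solve3(ata, atb)
    albedo = math.sqrt(sum(c * c for c in g))
    normal = [c / albedo for c in g] if albedo > 0 else [0.0, 0.0, 1.0]
    return albedo, normal


# Synthetic check: eight lights on a ring at 45 degrees elevation (assumed layout).
lights = [(math.cos(2 * math.pi * k / 8) * math.cos(math.pi / 4),
           math.sin(2 * math.pi * k / 8) * math.cos(math.pi / 4),
           math.sin(math.pi / 4)) for k in range(8)]
true_albedo, true_normal = 0.5, (0.0, 0.6, 0.8)
intensities = [true_albedo * sum(n * l for n, l in zip(true_normal, light))
               for light in lights]
albedo, normal = photometric_stereo_pixel(lights, intensities)
print(albedo, normal)
```

On this noise-free synthetic data the estimate matches the input albedo and normal up to floating-point rounding. Real captures also have to deal with shadows, specular highlights and calibration, which is where polarizers and diffused light come in.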

Mode Maison

tmac provides versatility, and top CGI industry companies have reported outstanding performance and quality. tmac offers a wide range of uses, a large scan area and, simply put, great visual quality.

tmac is a stepping stone that brings to the world a truly affordable, large scan area SVBRDF capture device. We are already far along with the assembly of a full large-area BTF device, which we would be talking about at SIGGRAPH, together with new Neural / NeRF BRDF representations and the first super affordable A4 SVBRDF scanner for the masses.

Mode Maison is currently fundraising.

Super brain and genius thoughts: Steven Gay

Investments, Fundraise: John Finger, Nick Godfrey

Rendering and Materials: Jakub Čech, Marcin Mackiewicz

3D modeling: Eugene & Teammax

Engineering, Design, Direction: Jakub Čech

Scientists in charge: Jiří Filip, Radomír Vávra

Photography, Website: Jakub Čech

Story 1/2

Four years ago I joined Mode Maison and built the pipeline for what we call the future of digital retail. Fully digitizing brands to their core, both 3D model and 2D surface, allows us to show them in a novel, nuanced and photorealistic way. The workflow was assembled from a flying capture team on location using state-of-the-art 3D capture methods, including structured light and photogrammetry, for 3D model acquisition. Fabric samples were shipped to us and digitized manually with cross-polarized photometric stereo.

Great, now we have geometry and surface data. 3D modelers clean up the data and follow the protocol, including every displaced stitch, wrinkle versioning for different material stiffness, and universal UV mapping. A material artist takes the scanned maps, creates a material, tweaks it against a reference image until it matches, and scatters millions of hairs with a universal predefined scatterer. Awesome.

After years in the making, we put both into our Nike-like white high-key studio and watch the screen with goosebumps: is this going to be the most realistic, nuanced, never-before-seen rendering ever?

This is the story we would like to tell, the rose-tinted version of creating the holy grail: a digital twin of a geometry and a digital twin of a surface. Having these two finally perfectly cleaned and matched so we can use them in any lighting scenario. That is how reality works, is it not? This is not how (current) CGI works.

My dear colleague Marcin spent countless hours matching the maps of a surface to the reference, creating the most amazing CGI materials I have ever seen. Then we apply them to a sofa and watch these materials break because of different lighting. Do we adjust the materials? Is the lighting "bad"?

I have spent ages manually scanning hundreds of surfaces, going around with cross-polarized light and taking 80 images for each material. Move to the next position, rotate the filter, snap. Bring in the diffused panel on wheels, put it in many positions, snap.

This is doable with a select number of brands to digitize and a state-of-the-art team of artists and technicians. Is it scalable? Absolutely not. What I am talking about is the future of digital retail, where creativity is endless. A brand is digitized once, both in 3D geometry and 2D surface, and these digital twins work exactly like reality and can produce the same beautiful, nuanced renderings that overcome the uncanny valley.

Story 2/2

After years I arrived at the reason why it used to take weeks to make one image. It is the same reason why brands producing flooring, wall coverings, plasters and upholstery, as well as furniture manufacturers, have a hard time throwing away photography and making everything in CGI. There are no tools for digital twins.

What is out there is scientific research on how surfaces react to incoming light and bounce it around at a microscopic level. CGI is composed of material, lighting and geometry. I would be courageous enough to say that 3D geometry is fine, ignoring for a moment the fact that we need to simulate different physical material behavior depending on whether it is thick leather or thin linen. The current BRDF representation of materials is physically based too, with Disney PBR taking over as the first ever standard. The lighting of a rendered Cornell box is proven to be almost exactly the same as the Cornell box photo. Where is the issue then?

The problem is not that these assumptions or equations are wrong or unscientific. It all makes sense and is built on top of decades of research. The problem is in the inputs.

The inputs for a BRDF material representation are maps like albedo, normal, specularity, gloss, SSS... there are tens of them. Each is possibly coupled with a weight, and with a falloff needed to define how fabrics behave at different elevations. Thousands of options. There is not a single device on Earth that captures appearance in such a way that it can set these parameters like a material artist does. And even after that, the material setup could be, and many times is, a miss and breaks in different lighting (talking about super nuanced photorealistic rendering).
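To make the role of these inputs concrete, here is a minimal sketch of how a renderer might turn the map values of one texel into reflected light. It uses a generic Lambert diffuse term plus a GGX microfacet specular term with Schlick Fresnel, not the full Disney model and not any tmac-specific material. The function name, the single-channel simplification, the `(1 - F)` diffuse weighting and the roughness remapping are assumptions chosen for illustration.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    length = math.sqrt(dot(v, v))
    return [c / length for c in v]


def shade_texel(albedo, roughness, f0, normal, light, view):
    """Reflected radiance of one texel for unit incoming radiance (single channel).

    albedo, roughness, f0: scalar values read from the material maps.
    normal, light, view: direction vectors (normalized internally).
    """
    n, l, v = normalize(normal), normalize(light), normalize(view)
    n_l, n_v = dot(n, l), dot(n, v)
    if n_l <= 0.0 or n_v <= 0.0:
        return 0.0  # light or viewer is below the surface horizon

    h = normalize([a + b for a, b in zip(l, v)])  # half vector
    n_h = max(dot(n, h), 0.0)
    v_h = max(dot(v, h), 0.0)

    alpha = max(roughness, 0.03) ** 2  # assumed remapping, clamped to avoid 0/0
    a2 = alpha * alpha

    # GGX (Trowbridge-Reitz) normal distribution
    d = a2 / (math.pi * (n_h * n_h * (a2 - 1.0) + 1.0) ** 2)

    # Smith shadowing-masking built from the GGX G1 term
    def g1(x):
        return 2.0 * x / (x + math.sqrt(a2 + (1.0 - a2) * x * x))

    g = g1(n_l) * g1(n_v)

    # Schlick Fresnel approximation
    f = f0 + (1.0 - f0) * (1.0 - v_h) ** 5

    specular = d * g * f / (4.0 * n_l * n_v)
    diffuse = (1.0 - f) * albedo / math.pi  # simplified energy split (assumption)
    return (diffuse + specular) * n_l


# Same texel, same camera, two light positions.
texel = dict(albedo=0.6, roughness=0.4, f0=0.04)
up = [0.0, 0.0, 1.0]
print(shade_texel(normal=up, light=[0.0, 0.0, 1.0], view=up, **texel))
print(shade_texel(normal=up, light=[1.0, 0.0, 1.0], view=up, **texel))
```

Even in this tiny model three scalars and a normal interact non-linearly with the light direction, so a parameter set tuned to match a reference photo under one light is not guaranteed to match under another.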

Another problematic area is Global Illumination, where we need correction models for colors, but more about that later.

In recent years I shifted to being someone else (other than a 3D artist): to work on and build the tools I have always dreamed about, so that I can finally execute my vision in a nuanced, photorealistic manner within days, not weeks. To be able to create true digital twins of surfaces and geometries, and possibly simulate in between. To come up with novel material models that do not break and do not need artistic adjustments. NeRF BRDF, AI material representation, correction models, Neural BRDF, BTF. A true one-to-one copy.

I would love to present tmac (total material appearance capture), which stands as a first stepping stone to getting there.

Some renderings made with tmac surfaces

Thank you!