Why tactile interfaces?

The sense of touch is a combination of sensations evoked by stimulating the skin, and it is crucial for our interactions with the world around us. For example, when we roll a pencil in our fingers, we can very quickly and precisely re-adjust the 3D positions and grasping forces of our fingers by relying entirely on tactile sensation. Touch also allows us to understand fine object properties where vision fails: e.g. textures and tiny surface variations can be accurately detected by touch. Touch also has a strong emotional impact: running a finger into a splinter, touching a cat's fur, or immersing fingers into an unknown sticky substance all bring intense, though very different, emotional responses.

In the projects listed below I investigate how we can use the sense of touch as an additional channel of communication between humans and digital devices, in particular the handheld mobile devices that have become so prevalent. My work on tactile user interfaces is ongoing research; therefore, more projects might be added in the future.

TouchEngine™ tactile platform

TouchEngine is a new haptic actuator that we created for designing and implementing a wide range of tactile user interfaces. It is particularly suited for implementing tactile feedback in handheld, mobile electronic devices. Indeed, currently TouchEngine is the only haptic actuator that has all of the following highly desired qualities:

- miniature size;

- lightweight;

- low voltage and power consumption;

- extremely low latency; accelerations of up to 5G can be produced;

- can produce a wide variety of tactile patterns with different frequencies and amplitudes;

- can be easily customized and retrofitted to devices of various sizes and forms;

- relatively inexpensive in mass production.

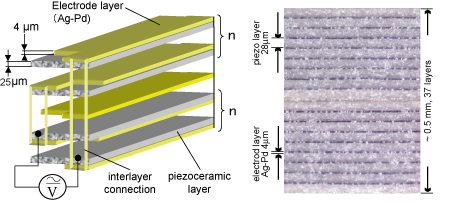

The TouchEngine actuator is constructed as a sandwich of 0.28 micrometer piezoceramic film layers with adhesive electrodes in between, resulting in a 0.5 mm beam. The piezoceramic material works as a solid-state "muscle" by either shrinking or expanding depending on the polarity of the applied voltage. The material on the top has the opposite polarity to that on the bottom, so when a signal is applied the whole structure bends. This configuration is often called a "bending motor" actuator.

Piezoelectric bending motor actuator.

Existing bending motors usually consist of only two layers (i.e. bimorphs) and require at least 40 V peak-to-peak to produce sufficient force, making them unsuitable for mobile devices. We can observe, however, that for voltage V and thickness T, the displacement D and force F for bending motors are:

$$D = a_1 \frac{V}{T^2} \quad \text{and} \quad F = a_2 T V,$$

where $a_1$ and $a_2$ are coefficients. Therefore, by decreasing the thickness T we can achieve the same displacement with a lower voltage. This, however, also decreases the force F; we compensate for this by layering several thin piezoceramic layers together. In this way, we reduce the voltage required for maximum displacement to 8-10 V peak-to-peak.
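The scaling argument above can be checked numerically. The following is a minimal sketch that uses only the two proportionalities from the text; the function name, the `thinning` parameter and, in particular, the assumption that the forces of stacked layers add linearly are illustrative and are not taken from the TouchEngine design.

```python
def scale_for_thinner_layer(v_ref: float, thinning: float) -> dict:
    """Scale drive voltage and force when layer thickness goes from T to T / thinning.

    Uses only D = a1 * V / T**2 and F = a2 * T * V; the coefficients a1 and a2
    cancel out of every ratio, so they never need to be known.
    """
    if thinning <= 0:
        raise ValueError("thinning must be positive")
    # Keeping the displacement D constant requires V' = V / thinning**2.
    voltage = v_ref / thinning ** 2
    # Per-layer force then scales as (T'/T) * (V'/V) = 1 / thinning**3.
    force_ratio_per_layer = 1.0 / thinning ** 3
    # ASSUMPTION (not from the source): forces of stacked layers add linearly,
    # so this many times more layers would restore the original force.
    layer_multiplier = thinning ** 3
    return {
        "voltage": voltage,
        "force_ratio_per_layer": force_ratio_per_layer,
        "layer_multiplier": layer_multiplier,
    }


# Hypothetical example: a 40 V reference layer made half as thick.
result = scale_for_thinner_layer(v_ref=40.0, thinning=2.0)
print(result)
```

Under these assumptions, halving the layer thickness cuts the required voltage by a factor of four while the per-layer force drops by a factor of eight, which is why stacking many thin layers is needed to recover the force.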

Left: Structure of the TouchEngine multilayer haptic actuator.

Right: Microscopic view of the actuator with n=9. You can also see an image with much higher magnification here.

The resulting actuator has unique properties: it is thin and small, it can be operated from a battery, and it can be produced in different sizes and with different numbers of layers. We implemented a range of tactile user interfaces with TouchEngine that have been demonstrated to the public and presented in detail at the UIST 2002 [PDF], CHI 2002 [PDF] and SIGGRAPH 2002 [PDF] conferences.

The actuator has also been used in a number of products that incorporated tactile user interfaces and were released on the market by Sony Corporation.

We embedded a tactile feedback apparatus in a Sony PDA touch screen and enhanced its basic GUI elements with tactile feedback.

Tactile Interfaces for Small Touch Screens

Touch screens have become common in mobile devices such as mobile phones, digital video cameras and high-end remote controls. Despite their popularity, one important challenge remains: graphical buttons cannot provide the same level of haptic response as physical switches. Without haptics, the user can rely only on audio and visual feedback, which breaks the metaphor of directness in touch screen interaction.

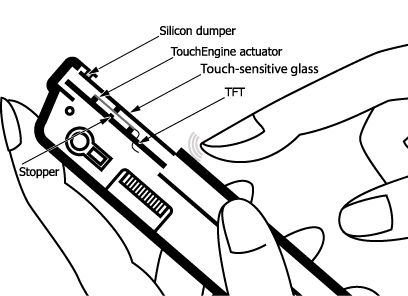

We present a tactile interface design for small touch screens used in mobile devices. We embedded four custom-designed TouchEngine actuators in the touch screen of a Sony Clie PDA (see figure above). The actuators were placed at the corners of the touch screen, between the TFT display and the touch-sensitive glass plate. The glass plate is larger than the display; hence, the actuators are not visible.

The important design decisions were as follows (see also drawing below):

- Actuation of the touch screen. When a signal is applied, actuators bend rapidly, pushing the touch-sensitive glass plate towards the user's finger. Actuators are very thin, hence we could embed them inside the touch screen. Therefore, only the lightweight touch-sensitive glass is actuated, not the entire unit which includes a heavy TFT display.

- Localized tactile feedback. Vibration of the touch screen glass produces tactile sensations only in the touching finger, not in the hand holding the device. To prevent the entire device from vibrating, a soft silicone damper is installed between the glass panel and the frame ridges. It allows the glass panel to move when pushed by the actuators while cushioning the impact on the device frame.

- Small high-speed displacement. Although the displacement of actuators is small (about 0.05 mm), its fast acceleration produces a very sharp tactile sensation.

- Silent operation. Large audible noise defeats the purpose of tactile display. Noise can be sharply reduced by a) wave shape design and b) mechanical design, i.e. by preventing loose parts from rattling when the actuators move.

- Reliability. Bending the fragile ceramic actuators more than 0.1 mm by pushing on the touch screen glass can damage them. Therefore, a stopper is placed under the actuators to prevent excessive bending.

Structure of tactile feedback apparatus embedded into the Sony Clie PDA.
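To illustrate why a small displacement can still feel sharp, the sketch below relates displacement amplitude, frequency and peak acceleration for purely sinusoidal motion, where peak acceleration equals A(2πf)². Treating the 0.05 mm displacement as a sinusoid amplitude and pairing it with the 5G figure quoted earlier (read here as five times standard gravity) are assumptions made only for illustration; the actual drive waveforms are not sinusoidal in general, and the function names are hypothetical.

```python
import math

G = 9.80665  # standard gravity in m/s^2


def peak_acceleration_g(amplitude_m: float, frequency_hz: float) -> float:
    """Peak acceleration, in units of g, of x(t) = A * sin(2*pi*f*t)."""
    return amplitude_m * (2 * math.pi * frequency_hz) ** 2 / G


def frequency_for_acceleration(amplitude_m: float, accel_g: float) -> float:
    """Frequency in Hz at which a sinusoid of amplitude A peaks at accel_g."""
    return math.sqrt(accel_g * G / amplitude_m) / (2 * math.pi)


# ASSUMPTION: 0.05 mm is taken as the sinusoid amplitude.
amplitude = 0.05e-3  # metres
freq = frequency_for_acceleration(amplitude, accel_g=5.0)
print(f"{freq:.0f} Hz gives {peak_acceleration_g(amplitude, freq):.1f} g")
```

Because acceleration grows with the square of the frequency, even a displacement of a few hundredths of a millimetre reaches several g once the motion is fast enough.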

There are two basic directions for exploring tactile feedback in touch screen GUI interaction. First, we can investigate application-specific tactile interfaces, e.g. enhancing drawing applications with tactile feedback. Second, we can investigate the combination of tactile feedback with general-purpose GUI elements. Since our immediate objective is to introduce tactile feedback into generic mobile devices, we have chosen the latter.

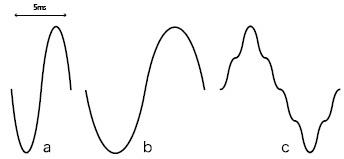

Interaction with touch screens is based on gestures. A gesture starts when the user touches the screen with a finger (or pen) and finishes when the user lifts it from the screen. The figure below presents a basic gesture structure for touch screen interaction. Each component of the gesture can be augmented with a distinct tactile feeling and, therefore, we can classify all tactile feedback into five types: tactile feedback provided when the user starts a gesture by touching a GUI element on the touch screen (T1), when the user then either drags (T2) or holds (T3) her pen/finger, and, finally, when the user lifts it off either inside (T4) or outside (T5) the GUI widget.

Structure of touch screen gesture.
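The five feedback types can be read as the outputs of a small state machine over touch events. The following is a minimal sketch of that reading, not the implementation used in the prototype: the `Rect` and `GestureTracker` classes and the `'down'`, `'move'` and `'up'` event names are hypothetical, and a hold is approximated as a move event whose position has not changed, whereas a real system would more likely use a timer.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Feedback(Enum):
    T1 = "touch down on a GUI element"
    T2 = "drag"
    T3 = "hold"
    T4 = "lift off inside the widget"
    T5 = "lift off outside the widget"


@dataclass
class Rect:
    x: float
    y: float
    w: float
    h: float

    def contains(self, px: float, py: float) -> bool:
        return self.x <= px < self.x + self.w and self.y <= py < self.y + self.h


class GestureTracker:
    """Maps raw touch events on one widget to feedback types T1..T5."""

    def __init__(self, widget: Rect) -> None:
        self.widget = widget
        self.active = False
        self.last = None

    def on_event(self, kind: str, x: float, y: float) -> Optional[Feedback]:
        if kind == "down":
            # A gesture only starts if the touch lands on the widget.
            if self.widget.contains(x, y):
                self.active, self.last = True, (x, y)
                return Feedback.T1
            return None
        if not self.active:
            return None
        if kind == "move":
            moved = (x, y) != self.last
            self.last = (x, y)
            return Feedback.T2 if moved else Feedback.T3
        if kind == "up":
            self.active = False
            return Feedback.T4 if self.widget.contains(x, y) else Feedback.T5
        raise ValueError(f"unknown event kind: {kind}")


tracker = GestureTracker(Rect(0, 0, 10, 10))
events = [("down", 5, 5), ("move", 6, 5), ("move", 6, 5), ("up", 20, 5)]
print([tracker.on_event(*e).name for e in events])
```

Each returned value would then select the wave pattern to play, so that every phase of the gesture can carry its own tactile feeling.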

We augmented basic GUI elements with tactile feedback, including several variations of buttons, scroll bars and menus. The tactile feedback wave patterns that were used are shown below. These particular instances of tactile feedback were made primarily for prototyping and evaluation; other tactile feelings might well be more effective.

Tactile waveshapes that we used with GUI elements.

We evaluated our prototypes in several informal usability studies, asking 10 colleagues to test our interfaces in audio, tactile and no-feedback conditions. Tactile feedback was exceptionally well received by our users, who remarked how similar it felt to a mechanical switch. We also observed that tactile feedback was most effective when the GUI widgets needed to be held down or dragged on the screen. The combination of continuous gestures and tactile feedback resulted in a strong feeling of physicality in interaction.

We also found tactile feedback effective in interacting with small GUI elements, as it provided fast and reliable feedback even when the GUI element was obscured by a finger.

The design of our interface is presented in much more detail in the UIST 2003 paper [PDF]. A number of products that incorporated our tactile interface were released on the market by Sony; please see the product descriptions for more details.

Tactile Feedback for Pen Computing

Until recently, pen-based devices were used mostly by professionals: engineers, artists and architects. Recently, however, the variety of devices that support pen input has grown with the introduction of PDAs and tablet PCs. With further improvement of the technology, the popularity of pen-based computing will continue to grow.

We seek to improve the experience of using pen devices by augmenting them with tactile feedback. In our initial experiments we embedded TouchEngine tactile actuators directly into the pen and provided feedback directly to the user's fingers (see our short SIGGRAPH presentation for more details [PDF]). The problem with this design is that the pen needs wires to power the actuators. A wireless solution would require embedding batteries and a wireless receiver into the pen, which would increase its size and weight, making it significantly more difficult to use.

Therefore, in our second prototype we embedded actuators directly into the screen, similarly to the small-screen tactile user interfaces presented above.

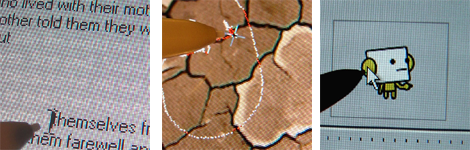

Four TouchEngine actuators were embedded into the stationary 15-inch LCD monitor of a Sony VAIO LX personal computer (figure above), which has Wacom pen-input technology built into the display. We then enhanced 2D pen interaction interfaces with tactile feedback and explored three strategies for tactile interaction with pen computers: tactile GUI, tactile information perception, and tactile feedback for active input.

Left: text selection was enhanced with tactile feedback;

Middle: feeling visual textures and photographs;

Right: feeling character motion.

GUI interaction. We added tactile feedback to GUI elements: buttons "clicked" when pushed, sliders provided a short tactile impulse each time the user scrolled a line, and text selection was enhanced with a tactile click for each selected character (see figure below). In informal evaluations, the users were very positive about tactile feedback. We observed that users strongly preferred tactile feedback when it was combined with an active gesture, e.g. dragging sliders or selecting text.

Feeling data. A tactile display may allow users to feel visual data similarly to how we feel physical textures in the real world, e.g. feeling cracks in a photograph of a desert (see figure above). We also added tactile feedback to character animation, so that the user could feel the character's motion, similar to how we can feel a pulse beating in a hand.

We found that increasing the complexity of textures seemed to reduce the effect of haptic feedback and even made interaction more confusing. We hypothesize that with complex visual textures the users could not easily correlate the image with the tactile feedback, particularly when they drew quickly and the latency of pen input was evident. Thus, spatial and temporal correlation between visual and haptic feedback in dynamic gestures must be explored in more depth. Techniques to cope with lag are also important.

Tactile feedback for active pen input. We added tactile feedback for drawing operations by producing a single tactile pulse each time the pen crossed a pixel (see figure below). The strength of the pulse was correlated with the pen pressure: stronger pressure resulted in stronger tactile feedback. We also explored tactile feedback in vector-style graphics manipulation, such as manipulating the control points of Bezier curves. Indeed, in complex drawings the density of control points is often very high, and tactile feedback might be useful for improving selection precision. Finally, we combined tactile feedback with constraints such as grids and alignments. In one example, the user would feel the underlying grid while manipulating a line's endpoint in snap-to-grid mode.

Active input enhanced with tactile feedback was most appreciated by the users. In particular, haptic constraints were met with delight, as they felt similar to a pen hitting a physical groove or guide.
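The per-pixel drawing feedback described above can be sketched as follows. This is an illustrative sketch only: the function name, the normalized 0-to-1 pressure range and the linear mapping from pressure to pulse amplitude are assumptions, since the text says only that pulse strength was correlated with pen pressure.

```python
import math


def pixel_crossing_pulses(path, pressures, max_amplitude=1.0):
    """Return one pulse amplitude per pixel boundary crossed along a pen path.

    path:      list of (x, y) pen samples in pixel units (floats allowed)
    pressures: pen pressure at each sample, assumed normalized to 0..1
    """
    if len(path) != len(pressures):
        raise ValueError("path and pressures must have the same length")
    pulses = []
    for i in range(1, len(path)):
        (x0, y0), (x1, y1) = path[i - 1], path[i]
        # Count pixel boundaries crossed along each axis between two samples.
        crossed = (abs(math.floor(x1) - math.floor(x0))
                   + abs(math.floor(y1) - math.floor(y0)))
        # ASSUMPTION: amplitude grows linearly with (clamped) pressure.
        pressure = min(max(pressures[i], 0.0), 1.0)
        pulses.extend([max_amplitude * pressure] * crossed)
    return pulses


# Hypothetical stroke: a slow start inside one pixel, then a two-pixel move.
stroke = [(0.2, 0.5), (0.8, 0.5), (2.4, 0.5)]
print(pixel_crossing_pulses(stroke, pressures=[0.5, 0.5, 1.0]))
```

Counting horizontal and vertical crossings separately is a simplification; a diagonal step into a neighbouring pixel produces two pulses here.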

Left: adding tactile feedback to drawing operations allows the user to feel pixels;

Middle: tactile feedback was added to control handles;

Right: the user could feel the underlying grid when manipulating vector drawings.

After refinement, we conducted experimental studies that investigated the effect of tactile feedback in pen tapping and drawing tasks. We designed the experiments according to ISO 9241 Part 9. Two tasks were evaluated. First, in the tapping task, the subjects repeatedly tapped on strips of varying width separated by different distances. Second, in the drawing/dragging task, the subjects drew a line with a pen from a starting point to a target strip of varying width.

We found that tactile feedback did not improve tapping performance. We observed that in the tapping task subjects often hit the target rather than touched it, so the contact between the pen and the screen was very short. As a result, subjects sometimes failed even to notice the tactile feedback.

Tactile feedback improved user performance in the drawing task. There was a significant interaction between feedback, amplitude and target width; the effect of feedback was strongest for smaller targets. Assuming that dragging follows Fitts' law, the bandwidth can be estimated as 5.9 and 4.8 bits/s for the tactile and no-tactile conditions, respectively (see figure below). As the difficulty of the task increases, the benefit of tactile feedback also increases.

Scatter plot of time versus Fitts' index of difficulty with linear regression lines for different tactile conditions.
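To give a feel for what the two bandwidth figures mean, the sketch below computes a Fitts' index of difficulty and the movement time predicted for each condition. It assumes the Shannon formulation of the index of difficulty that is commonly used with ISO 9241-9, and it assumes a zero regression intercept so that time is simply difficulty divided by bandwidth; the source does not state how its bandwidths were derived. The target distance and width are hypothetical values chosen for illustration.

```python
import math


def index_of_difficulty(distance: float, width: float) -> float:
    """Shannon formulation of Fitts' index of difficulty, in bits."""
    return math.log2(distance / width + 1)


def movement_time(id_bits: float, bandwidth_bits_per_s: float,
                  intercept_s: float = 0.0) -> float:
    """Predicted movement time in seconds: MT = a + ID / bandwidth."""
    return intercept_s + id_bits / bandwidth_bits_per_s


# Hypothetical dragging task: 150-pixel distance to a 10-pixel-wide target.
id_bits = index_of_difficulty(distance=150, width=10)

# Bandwidths reported in the text for the two conditions.
t_tactile = movement_time(id_bits, 5.9)
t_no_tactile = movement_time(id_bits, 4.8)
print(f"ID = {id_bits:.1f} bits: {t_tactile:.2f} s with tactile feedback, "
      f"{t_no_tactile:.2f} s without")
```

Because the predicted time is proportional to the index of difficulty under these assumptions, the absolute time saved by the higher bandwidth grows as targets become smaller or more distant.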

The experimental results suggest that combining an active gesture with tactile feedback yields significantly better results than the simple tapping task. This is also supported by the survey of the users' subjective preferences. We believe that these tasks and conditions approximate the bulk of the interactions in pen computing, such as selecting targets of various sizes separated by differing distances, dragging icons and scroll bar handles, etc. Therefore, the results of the experiments can be generalized to a wider range of traditional GUI applications.

Publications

Poupyrev, I., M. Okabe, and S. Maruyama. Haptic Feedback for Pen Computing: Directions and Strategies. Proceedings of CHI 2004. 2004: ACM: pp. 1309-1310 [PDF].

Poupyrev, I., S. Maruyama, and J. Rekimoto. TouchEngine: A tactile display for handheld devices. Proceedings of CHI 2002, Extended Abstracts. 2002: ACM: pp. 644-645 [PDF].

Poupyrev, I. and S. Maruyama. Tactile interfaces for small touch screens. Proceedings of UIST 2003. 2003: ACM: pp. 217-220 [PDF].

Poupyrev, I. and S. Maruyama. Drawing With Feeling: Designing Tactile Display for Pen. Proceedings of SIGGRAPH 2002, Technical Sketch. 2002: ACM: p. 173 [PDF].

Poupyrev, I., S. Maruyama, and J. Rekimoto. Ambient Touch: Designing tactile interfaces for handheld devices. Proceedings of UIST 2002. 2002: ACM: pp. 51-60 [PDF].
