Situation

Promethean, Inc. provides interactive education technology solutions. The company offers education software, interactive whiteboards, learner response systems, interactive tools, and classroom audio tools. Promethean's ActivProgress is an enterprise-level software solution that helps teachers, principals, and district administrators collect, track, manage, and synthesize data about students. Teachers can analyze data about students' progress to better meet each child where they are in their learning and provide them with experiences that propel them forward more efficiently. Administrators can use the same data to allocate resources effectively toward the same end: student achievement.

ActivProgress users can create assessments and administer or proctor them via the web, mobile devices, Promethean's Learner Response Systems (LRS), or custom printable answer bubble sheets (which are then scanned back in). It offers a broad range of question and answer types that allow teachers to assess in many different ways. Alignment with state and Common Core standards makes it easier to track progress against those goals. Automatic grading is available for many traditional question types (like multiple choice and true/false), making it easy to generate robust reports with quantitative data about student performance.

But what happens when teachers want to assess through more qualitative means? How can the system take something like a student's papier-mâché volcano, an essay, or a play performance, and let authentic, qualitative assessment and tracking of that work coexist in a data ecosystem with true/false questions?

In common practice, teachers often create and score items like these with rubrics. Generally, rubrics are tables with qualitative criteria on one axis, levels of quality on the other, and descriptors in the inner cells that identify what each level looks like for each criterion. Teachers create them in concert with their peers and with students, using general-purpose tools like Microsoft Office or, at best, sites like Rubistar.

Task

Our task was to layer rubric functionality into numerous workflows within ActivProgress. Teachers and administrators needed to be able to use our brand-new tools to:

* Find, view, edit, and share relevant existing rubrics

* Create new rubrics of varying complexity, with the option to weight criteria and align them to standards, and the ability to share and co-create them with peers

* Assign rubrics to particular test questions in the test-creation flow

* Grade those questions or items for each child using interactive versions of their rubrics

* View student performance on questions graded with rubrics alongside other quantitative data

Some of this functionality was embedded in existing UIs, and some was completely new.
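The rubric model behind these tasks (criteria on one axis, quality levels on the other, optional weights, one level chosen per criterion when grading) can be sketched as a small data structure. The Python below is a minimal illustration under stated assumptions, not ActivProgress's actual implementation: the class and method names are hypothetical, and it assumes each quality level is worth its position in points (1 for the lowest), scaled by the criterion's weight, so that a rubric-graded item yields a percentage that can sit alongside auto-scored results.

```python
from dataclasses import dataclass, field


@dataclass
class Criterion:
    """One rubric row: a qualitative measure plus a descriptor for each quality level."""
    name: str
    descriptors: list[str]  # ordered lowest to highest, one per level
    weight: float = 1.0     # hypothetical relative weight for this criterion


@dataclass
class Rubric:
    """A rubric table: criteria on one axis, quality levels on the other."""
    title: str
    levels: list[str]  # column headers, ordered lowest to highest
    criteria: list[Criterion] = field(default_factory=list)

    def add_criterion(self, name: str, descriptors: list[str], weight: float = 1.0) -> None:
        # Every inner cell of the table needs a descriptor.
        if len(descriptors) != len(self.levels):
            raise ValueError("each criterion needs exactly one descriptor per level")
        if weight <= 0:
            raise ValueError("weight must be positive")
        self.criteria.append(Criterion(name, descriptors, weight))

    def score(self, selected: dict[str, int]) -> float:
        """Return a weighted percentage from the level index chosen for each criterion.

        Assumption: level index i earns i + 1 points, so the lowest level earns 1
        and the highest earns len(levels); each criterion's points are scaled by its weight.
        """
        if not self.criteria:
            raise ValueError("rubric has no criteria")
        max_points = len(self.levels)
        earned = possible = 0.0
        for c in self.criteria:
            index = selected[c.name]  # KeyError if a criterion was left ungraded
            if not 0 <= index < max_points:
                raise ValueError(f"invalid level {index} for {c.name!r}")
            earned += c.weight * (index + 1)
            possible += c.weight * max_points
        return 100.0 * earned / possible


volcano = Rubric("Papier-mâché volcano", ["Beginning", "Developing", "Proficient", "Exemplary"])
volcano.add_criterion(
    "Scientific accuracy",
    ["Eruption not explained", "Partly explained", "Explained", "Explained with evidence"],
    weight=2.0,
)
volcano.add_criterion("Craftsmanship", ["Incomplete", "Rough", "Solid", "Polished"])
print(round(volcano.score({"Scientific accuracy": 3, "Craftsmanship": 1}), 1))  # 83.3
```

Converting to a percentage is only one possible design choice; a real system might instead report raw points, or keep the per-criterion levels separate so reports can show sub-scores.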

Action

Feature Landing Page: Finding, Viewing, Editing, & Sharing Rubrics

Screenshot from the Axure prototype of the "Rubrics Home/Landing" page, where teachers could browse or search for existing rubrics and evaluate their relevance, or choose to create a new rubric. It was important for users perusing quickly to be able to view the rubric itself along with its associated metadata, and to make inferences about its relevance and quality based on who created it. To download the actual prototype, click here. To view and play with the actual prototype once downloaded, open the .zip file and open the "home.html" file with your browser.

Rubric Builder Workflow

Above all, I wanted the workflow we created to match teachers' mental model of how quality rubrics are made, and to make sure the tools got out of the way and supported deep thinking about the content teachers were creating. The tool also had to be quick and dead simple; otherwise, teachers might not bother.

I felt passionate about making the tool work well because it opened the software up to all kinds of new uses. Before, teachers had to limit their use, accept an incomplete set of data, or, worse, tailor their assessment methods to fit only quantitative means of measurement. With rubrics, teachers can give students more constructive feedback that helps them improve their work. They can create assessments that better meet the needs of English Learners, Special Ed students, kids with ADHD, and kids who like to move and make things, and all of that work can live within the big-data ecosystem of ActivProgress.

My prior work as a bilingual 3rd grade teacher and then an Art/ESL teacher in New York with Teach For America, along with my master's work in action research, underscored the importance of rubrics as part of a system of periodically assessing students in ways that are meaningful to them and that contribute to learning and a constructive partnership with their teacher.

On the whole, the product grapples with a tough dichotomy: whether to provide a modular set of tools without a prescribed workflow, which offers versatility for different needs and teaching styles, or to select the most common use cases and simplify the feature set for them with a series of more structured but less flexible workflows, such as wizards.

Based on competitive and comparative analyses, conversations with teachers, and my own experience as a teacher user, I worked with the product manager to suggest micro features, such as cutting and pasting across multiple cells from a table in Excel, Word, or another text editor; importing tables as the starting point for a rubric; and contextual help covering both how to use the software and content-related professional development (i.e., what actually makes a good rubric).

Because we had limited access to resources and user research early on, I was very lucky to be able to use my own subject-matter expertise as a starting point for the first iterations. I was also lucky to have, as my mentor of 15 years, a High School English department chair and district/state curriculum coordinator. He humored me by vetting my ideas and mental models, and suggested further reading on best practices for creating rubrics. I kept in mind that idealized, best-practice teacher behavior was likely not the norm, so I needed to create a system simple enough that users would want to use it (like recycling), while making sure teachers could benefit from even minimal effort.

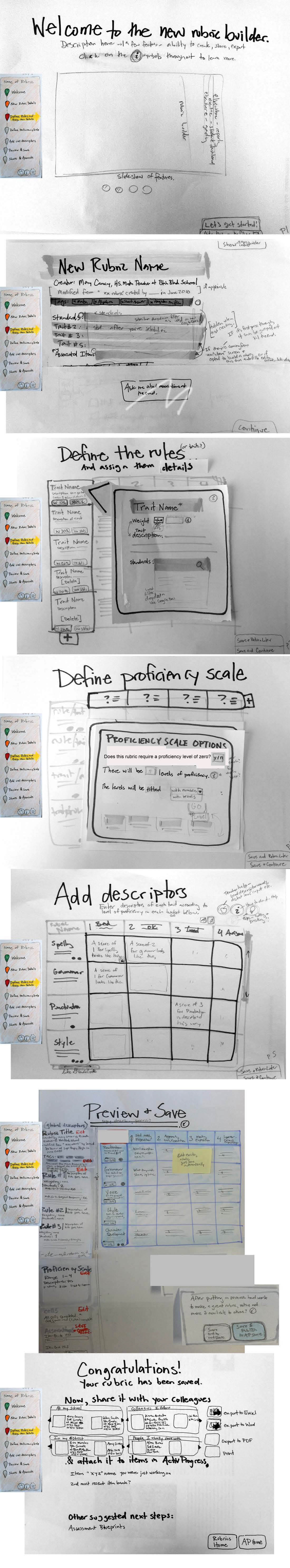

Next, in my discovery phase, I created a rubric myself and took notes on the process, keeping the nuanced pain points of a user's journey toward a quality rubric top of mind. I also did a comparative and competitive review to see what other rubric builders did and how they worked. After some sketching, a long-form pattern with constantly refreshing content emerged as both well suited to the task and feasible for development.

As I sketched, I talked with the developers about feasibility and ran ideas for product micro features by our PM. We created a backlog of them, which she maintained alongside other priorities competing for development resources. We strove to balance the designer's "simplify, simplify, simplify" mentality with creative imagination about additional features that might be desirable, groundbreaking, and helpful for teachers.

Because I was working so closely with developers, I could literally hand them a PDF of my photographed hand sketches to get them started, and we refined the designs together as they developed, building a design/dev process as we needed it. The company had grown out of a small-startup culture where design resources were often scarce, so many of the engineers were used to coming up with product design and UI solutions de facto as they built software functionality. Developing from a set of wireframes, rather than just a list of requirements, was a new process for them. As they implemented, we made minor adjustments to the design. And as functional buttons began to surface in the UI, I worked with a really smart front-end developer to tweak the layout and styling, prioritizing usability changes, such as button size and placement, first.

It was incredible to see the transformation from early paper wireframes all the way to working software embedded in a product. I really wanted to do more formal usability testing, but there was no budget or time for it in the timeline. I did get permission to show early designs and staged implementations of the burgeoning UI to teacher friends, and I gathered their feedback informally as we iterated.

For existing features or areas of the software that were redesigned to accommodate the new functionality, the redesign was in a few cases also an opportunity to make big UI changes with wider benefits. At the same time, I needed to make sure that the parts of those screens that already worked for users, or that were familiar and helpful, didn't suffer inadvertent damage. We redesigned the screen where teachers grade any answer the computer couldn't score automatically (including, but not limited to, questions graded with a rubric) and took the opportunity to streamline that process. I worked closely with the Client Services team, who had a nuanced understanding of the current functionality and of common user needs and pain points.

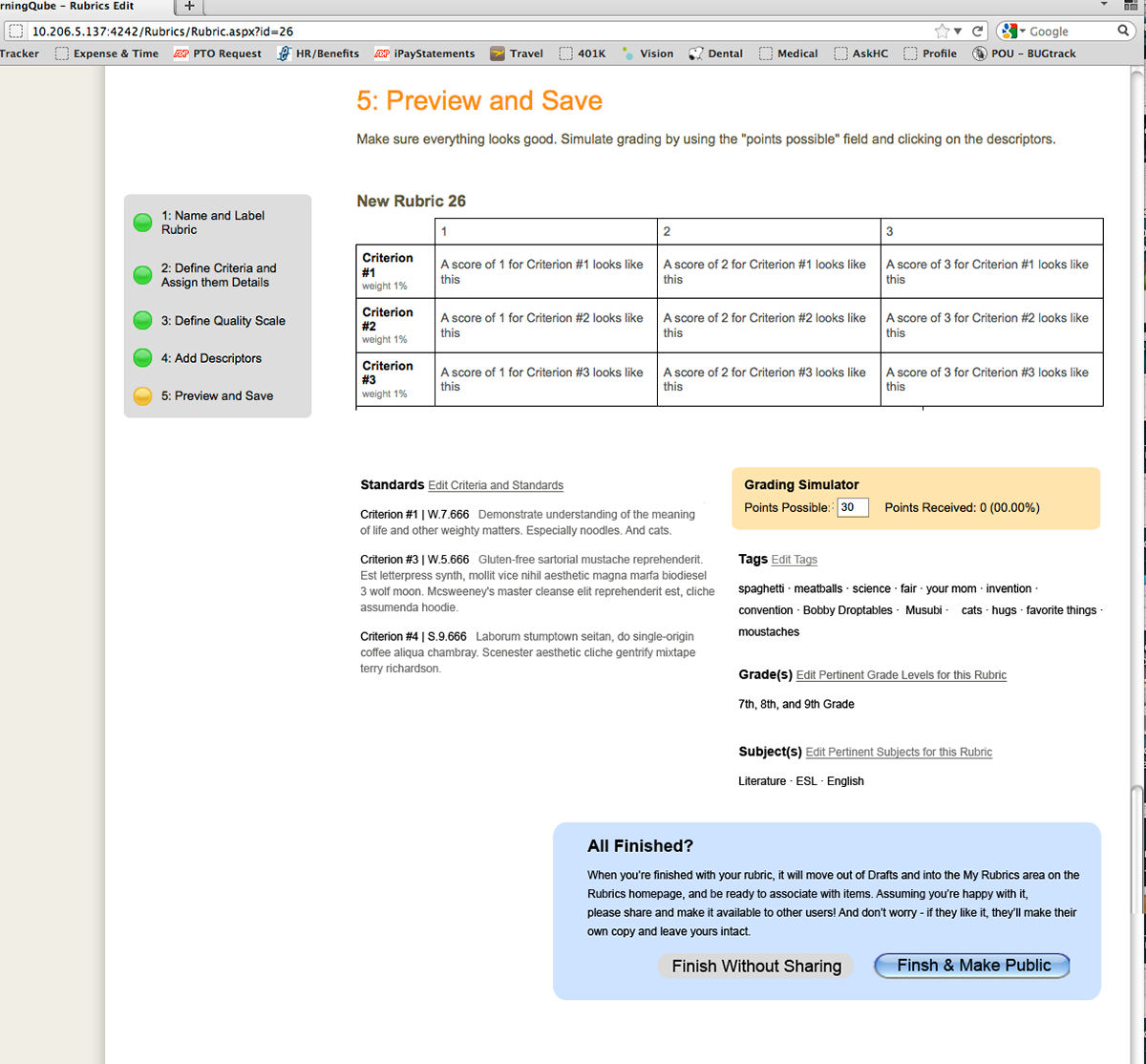

Screenshot: the last step of the Rubric Builder, as implemented in ActivProgress by front-end dev wizard David Whitlark. See more screenshots of the Rubric Builder here.

Adding a Rubric to a Question

Screenshot from a prototype demonstrating adjustments to an existing "question creation" page, wherein teachers designate a rubric as the means to grade that particular question or prompt. To download the actual Axure prototype, click here. To view and play with the actual prototype once downloaded, open the .zip file and open the "home.html" file with your browser.

Grading with a Rubric

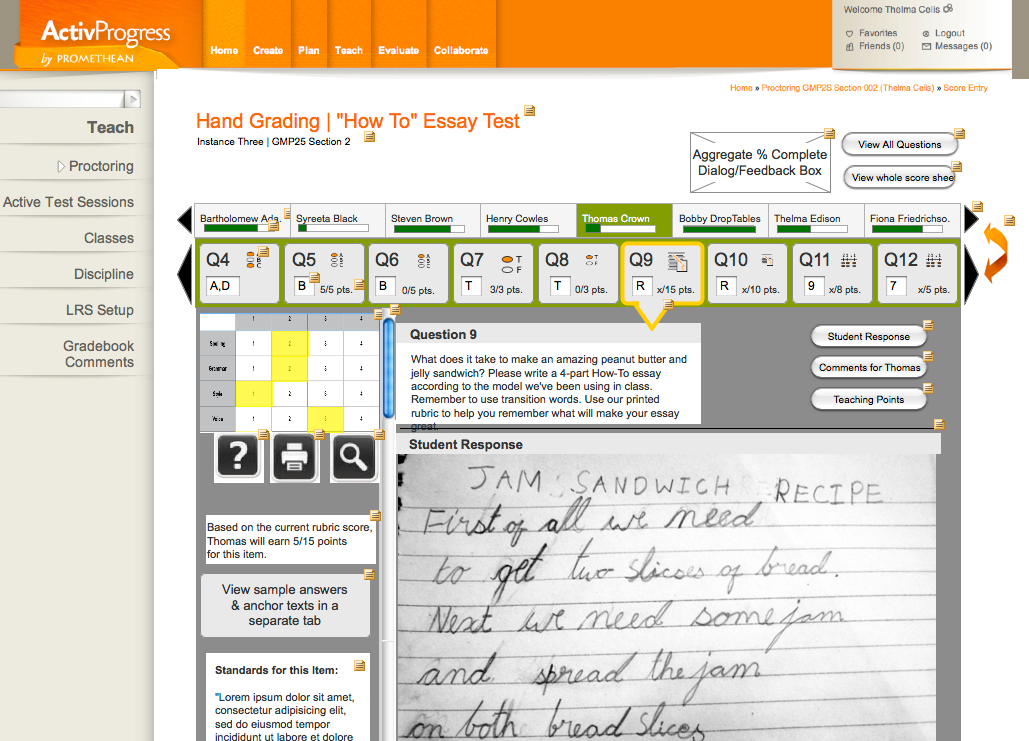

The Hand Scoring screen was designed for speed. If the benefit of viewing student progress were too far removed or too time-consuming to reach, teachers would simply use the tool less; conversely, its value to stakeholders grows with frequent use. It lets teachers work through all of the questions on a single student's assessment in sequence, or barrel through scoring one particular question across multiple children's assessments. It also shows what percentage of each child's assessment has been graded.
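The two grading orders and the per-child completion percentage described above can be sketched roughly as follows. This is a hypothetical Python model (the `Response` record, `completion_by_student`, and `grading_queue` are illustrative names, not ActivProgress code) that assumes each hand-scored answer is either graded or still pending.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class Response:
    """One student's answer to one question that needs hand scoring (hypothetical model)."""
    student: str
    question: str
    score: Optional[float] = None  # None until a teacher enters a grade


def completion_by_student(responses: list[Response]) -> dict[str, float]:
    """Percentage of each student's hand-scored responses that already have a grade."""
    total = Counter(r.student for r in responses)
    graded = Counter(r.student for r in responses if r.score is not None)
    return {s: 100.0 * graded[s] / n for s, n in total.items()}


def grading_queue(responses: list[Response], mode: str) -> list[Response]:
    """Ungraded responses ordered for the teacher's chosen workflow.

    mode="student": finish one student's assessment before moving to the next.
    mode="question": score one question across every student before moving on.
    sorted() is stable, so the original order is kept within each group.
    """
    if mode not in ("student", "question"):
        raise ValueError("mode must be 'student' or 'question'")
    pending = [r for r in responses if r.score is None]
    return sorted(pending, key=lambda r: getattr(r, mode))


responses = [
    Response("Ana", "Q1", 3.0),
    Response("Ana", "Q2"),
    Response("Ben", "Q1"),
    Response("Ben", "Q2"),
]
print(completion_by_student(responses))  # {'Ana': 50.0, 'Ben': 0.0}
print([(r.student, r.question) for r in grading_queue(responses, "question")])
# [('Ben', 'Q1'), ('Ana', 'Q2'), ('Ben', 'Q2')]
```

Keeping both orders as views over the same pending list means switching workflows never loses or duplicates a grade; only the traversal changes.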

Screenshot from a prototype of the new hand grading screen, where teachers could quickly enter grades for any assessment questions the system could not score itself, including but not limited to questions scored with a rubric. The new view shows the question or prompt, the student work sample(s), and the rubric used for grading all together for easy reference. It also suggests new, pedagogically relevant functionality to enhance the feedback given to students and to help teachers use assessment results to adjust their future teaching plans. To download the actual Axure prototype, click here. To view and play with the actual prototype once downloaded, open the .zip file and open the "home.html" file with your browser.

Viewing Assessment Data with Rubric Information Added In

Reporting objectives: indicate that a rubric was used to grade a particular question, and show the sub-scores for each such question. To help teacher users get the most out of ActivProgress's reporting functions, we based the reports' focus on what we knew from our personas: a teacher at home in the evening, looking over students' performance from the day and making tweaks to tomorrow's lesson and future lesson plans. Working through the reporting changes needed to accommodate rubrics also gave our team some leeway to (re)design other aspects of how the platform displays student achievement data more generally.
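As a rough sketch of the kind of sub-score roll-up that evening review might rely on, the hypothetical functions below average the points a class earned on each rubric criterion for one question and flag the weakest criterion. The input shape (student, then criterion, then points) and the function names are assumptions for illustration, not the ActivProgress reporting engine.

```python
def criterion_averages(grades: dict[str, dict[str, int]]) -> dict[str, float]:
    """Class-average points per rubric criterion for one rubric-graded question.

    grades maps each student to {criterion name: points earned on that criterion}.
    Students missing a criterion are simply left out of that criterion's average.
    """
    points_by_criterion: dict[str, list[int]] = {}
    for per_student in grades.values():
        for criterion, points in per_student.items():
            points_by_criterion.setdefault(criterion, []).append(points)
    return {c: sum(p) / len(p) for c, p in points_by_criterion.items()}


def weakest_criterion(averages: dict[str, float]) -> str:
    """The criterion with the lowest class average: a candidate focus for tomorrow's lesson."""
    return min(averages, key=averages.__getitem__)


essay_grades = {
    "Ana": {"Thesis": 4, "Evidence": 2},
    "Ben": {"Thesis": 3, "Evidence": 1},
    "Cy": {"Thesis": 4, "Evidence": 3},
}
averages = criterion_averages(essay_grades)
print({c: round(v, 2) for c, v in averages.items()})  # {'Thesis': 3.67, 'Evidence': 2.0}
print(weakest_criterion(averages))  # Evidence
```

A report built on per-criterion averages like these shows a teacher not just that the class struggled with an essay, but which part of the rubric to revisit.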

My role was to determine how users should access reports, what the reports should show, and how they should show it, pitching ideas to product and development to check feasibility. I researched the interactivity needs of data visualization in general, starting with Edward Tufte and moving on to Stephen Few as well as online sources, and did a competitive review of the data visualizations offered by other providers. Meanwhile, the development team researched how the reporting engine could work. The platform's reporting engine had originally been built in a way that made interactive data visualizations very difficult to implement, but once reporting was prioritized, the team began transitioning to a JavaScript library called d3.js. As a result, the technical constraints on the types of graphs and their level of interactivity changed drastically over the course of the project.

In the end, because the company reallocated development resources, we were able to modify some existing reports but had to table the implementation of key new reports, such as the one below displaying longitudinal data.

Result

The new rubric functionality was a smash hit with teachers and administrators across many school districts using ActivProgress. Use of the functionality increased considerably, and cries to implement the new data visualizations, and so make fuller use of the increased user input, have grown ever louder. Promethean sales associates now highlight the rubric features, touting them as some of the neatest perks in the system, especially when pitching to clients for whom differentiated instruction and better support for special education students and English Learners are important. I was even asked to help train the sales team, alongside Promethean's product evangelist, on the importance and usability of the new features, as well as on the pedagogical benefits and use of rubrics in general.