Background

Founded in 2016 and based in Minneapolis, MN, Inspectorio provides software solutions for supply chain management, focusing on quality, compliance, and delivery.

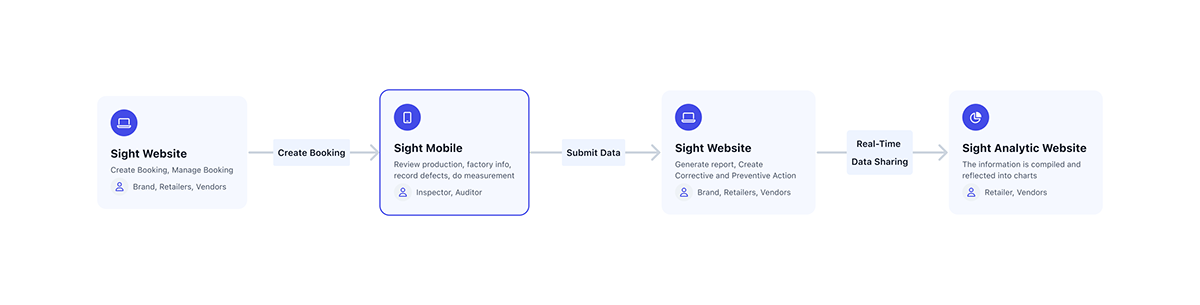

Inspectorio SIGHT consists of three main platforms, each with its own role and features:

‣ SIGHT Web: This platform enables suppliers, retailers, and brands to create inspection orders. These orders include information about the factory, production processes, and product details such as quantity, color, and type. This is also where information is turned into reports and Corrective and Preventive Action Plans (CAPA).

‣ SIGHT Mobile: This application helps inspectors receive and manage the inspection orders assigned to them. They can view reports and capture information during inspections by taking photos, recording video, and entering measurements.

‣ SIGHT Analytic: The collected information is transformed into charts, reflecting production data and common defects, thereby proposing suitable production solutions for different industries.

The workflow includes:

‣ Retailers and brands create an order, including detailed information about the factory and the production process.

‣ This order is then assigned to inspectors according to their schedule. They visit the factory to conduct inspections, take measurements, and verify production information.

‣ After completion, they upload this information to the server in the form of PDF reports. These reports are then sent to suppliers, retailers, and brands. If there are any discrepancies during production, CAPA plans are implemented.

‣ From the synthesis of many reports, AI processes the information to provide an overall picture of a factory's or an entire enterprise's production capabilities, thereby offering assessments and production plans for the brands.

Research

In the early version of the Inspectorio mobile app called Sight, we received substantial user feedback related to the user experience and performance. This prompted a detailed research initiative aimed at identifying and addressing current user challenges effectively.

Reevaluation of Personas:

With a significant user base established, we undertook a reevaluation of our existing personas to ensure they accurately reflect the current needs and behaviors of our users. Here are the redesigned personas:

‣ Program Manager: Focused on overseeing project timelines and compliance.

‣ Merchandiser: Involved in selecting products and coordinating with suppliers.

‣ QC/QA Manager: Responsible for quality control and assurance standards.

‣ QC Auditor/Inspector: Conducts detailed on-site product inspections.

Reevaluation of User Journey:

We updated the existing user journey maps to include additional elements such as potential barriers and opportunities that could affect user interaction.

Research Methodology:

Utilizing the 3D framework, we selected the following methods to enhance our design approach:

Quantitative Research Overview:

Quantitative research in our project primarily employs closed-ended questions, allowing us to gather statistical and generalizable data. This method helps us understand how users feel about our app, although our sample size is relatively small, ranging from 20 to 50 participants due to the limited number of inspectors available. We've implemented several methods to optimize our research reach and depth:

1. Email Survey

We utilize SurveyGizmo to conduct surveys through emails sent to 45 inspectors across Vietnam, China, and South America. This approach helps us gather essential information on user habits such as platform usage frequency and overall user experience. It also helps identify any specific errors users encounter during setup and their general feedback on app usability.

2. Contextual Survey

We have integrated WalkMe into our system from the start, which lets us collect user feedback immediately after users complete a task or process. Although this tool is limited to web platforms, it is invaluable for gathering direct responses that help us understand user interactions during the pre-engagement phase and pinpoint any discrepancies.

3. Data Analytics

Alongside our user interviews, we continuously review data from AppSee, a user interaction recording platform. This tool provides us with detailed metrics on user interaction times for each step and screen, response dead zones, and heat maps of interaction points. We discovered some deployment issues with overlapping heat maps between screens, which we have reported to the relevant teams for resolution.

Qualitative Research Overview

For the qualitative research, we followed Nielsen's guidelines and sampled 5 to 8 participants per method, which keeps the sessions efficient and avoids redundant responses.

1. In-Depth Interviews:

We conduct remote interviews with 5 inspectors from China and South America. Our participants are aged 40-55, have deep industry experience, and have used our app for an extended period. The open-ended questions are designed to delve into common issues encountered while using the app. We encourage participants to express their opinions and suggest features that would aid their work processes.

2. Contextual Inquiry:

After gathering feedback through interviews and surveys, we identified several insightful comments. However, some responses may be subjective and based on an incomplete understanding of the inspectors' workflows. Thus, we opted to observe the participants in their actual work environments. We arranged a meeting at a factory on the outskirts of Ho Chi Minh City, where the inspectors walked us through their daily work processes, preparation for inspections, and application usage, providing us with a clearer picture of the challenges they face.

3. Usability Testing:

We selected inspectors with varied lengths of app usage to gather more precise data. This group includes individuals with varying years of experience, including some who spend fewer hours per week in our app. We conducted usability tests at their offices to identify user experience improvements.

Research Insights:

Our research yielded several key insights:

‣ Vietnamese users are less likely to share their experiences compared to users from other countries.

‣ Users tend to share more emotionally charged and biased experiences during online interviews.

‣ Responses collected through email surveys often lack depth and focus, resulting in data that does not accurately reflect the app's quality.

‣ Observations of users interacting with the app in their work environment often reveal contradictions to their interview responses; users detail specific challenges when performing tasks sequentially, whereas during interviews, they tend to mention only the most memorable aspects.

‣ Some identified issues are not due to poor user experience but rather stem from inadequate education and onboarding processes, leading to misunderstandings about the app's functions.

‣ Users frequently fail to distinguish between user experience flaws and system issues such as slow response times, memory overload, and crashes.

‣ Some users insist on features that cater specifically to their personal needs.

‣ While some users are very cooperative, others are not.

Workshop Organization to Validate Assumptions:

Based on the data gathered during our research phase, we formulated hypotheses about potential solutions to address the issues identified. To verify the accuracy of these hypotheses, we organized a workshop and invited all the inspectors we were aware of, approximately 10 participants.

Card Sorting Exercise:

At the workshop, we employed sticky notes to list the problems identified during the research phase. We asked participants to arrange these notes according to their workflow sequence and to rate each issue by severity (critical, major, or minor) using colored sticky dots: red for critical, yellow for major, and green for minor. This helped us prioritize urgent problems and collaboratively brainstorm solutions. We also sought their expert opinions by asking them to sketch out solution ideas on a predefined template. This visual feedback helped us understand their perspective on solving the issues, enabling us to align proposed solutions with our system design effectively.
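To make the dot-voting step concrete, here is a minimal sketch of how such votes could be tallied into a priority list. The numeric weights, the function name, and the sample votes are all assumptions for illustration; the workshop itself only defined the three colors, and we did the ranking by hand on a wall.

```python
from collections import Counter

# Assumed weights: the workshop defined only the colors, not numeric scores.
DOT_WEIGHT = {"red": 3, "yellow": 2, "green": 1}
DOT_SEVERITY = {"red": "critical", "yellow": "major", "green": "minor"}


def rank_issues(votes):
    """Rank issues from (issue, dot_color) votes, highest total weight first."""
    scores = Counter()
    colors = {}
    for issue, color in votes:
        scores[issue] += DOT_WEIGHT[color]
        colors.setdefault(issue, Counter())[color] += 1

    ranked = []
    # Sort by descending score, then alphabetically so ties are stable.
    for issue, score in sorted(scores.items(), key=lambda kv: (-kv[1], kv[0])):
        counts = colors[issue]
        # Label the issue with its most common color; ties go to the more severe one.
        top_color = max(counts, key=lambda c: (counts[c], DOT_WEIGHT[c]))
        ranked.append((issue, DOT_SEVERITY[top_color], score))
    return ranked


# Made-up votes, for illustration only.
votes = [
    ("slow performance", "red"),
    ("slow performance", "red"),
    ("slow performance", "yellow"),
    ("cannot reorder measurement points", "green"),
    ("cannot reorder measurement points", "green"),
    ("forget to add measurement points", "yellow"),
    ("forget to add measurement points", "yellow"),
]
ranking = rank_issues(votes)
for issue, severity, score in ranking:
    print(f"{severity:8} {score:2}  {issue}")
```

A weighted sum is only one possible choice; counting red dots alone would be a stricter alternative when the goal is to surface blockers first.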

Research Outcomes

Critical Issues:

‣ The error recording screen interface is user-unfriendly and can easily confuse users.

‣ The system logic for recording measurements does not meet the inspectors' requirements.

‣ Inspectors often measure multiple items at once before recording, contrary to the current design of measuring one item at a time then recording.

‣ Users struggle to complete the questionnaire as they need to press the back button after each question to proceed to the next one.

‣ The interface is inconsistent and outdated compared to the company's new image and clientele.

‣ The app takes up too much storage space on phones.

‣ The app's performance is slow.

Major Issues:

‣ Users often forget to add measurement points before starting the measuring process.

‣ Users cannot perform multiple inspections at once and need a method to easily switch between inspections.

‣ The "Move to Fast" screen, which is meant to prevent inspectors from skipping steps in the process, appears too often when users move through steps quickly.

Minor Issues:

‣ Users are unclear which questions require accompanying images, so the app often reports errors when they press the submit button.

‣ After completing a question, users have to press the back button to start the next question.

‣ Users cannot arrange the measurement points in the order they desire.

Prepare

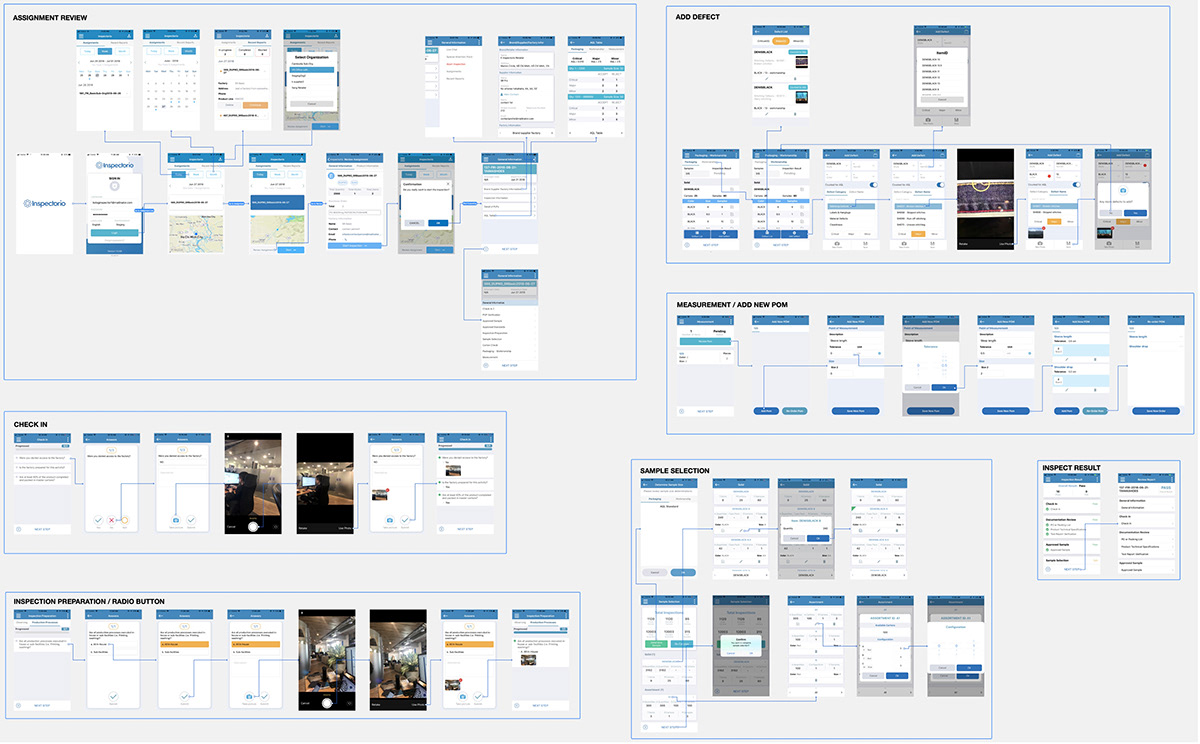

App Map

Before starting the application design, I decided to review the existing app map to identify any inconsistencies in the previous design. This allowed us to gain an overview and develop an appropriate strategy for the number of screens needed, from which we established a timeline for all stakeholders.

Branding & UI Kit

Before proceeding with the interface design, conveying the brand spirit and ensuring consistency across platforms is crucial. I thoroughly researched branding guidelines and chose dark blue to convey a fresh, dynamic, and user-friendly feel to new customers. I also refined the graphic components to make them rounder and softer, helping to reduce user stress at work. An initial basic UI Kit was set up and will later be developed into a complete design system.

Design

To save time and make the work easier to follow, I will present the designs that address the most critical issues first. Below are four severe issues and their solutions:

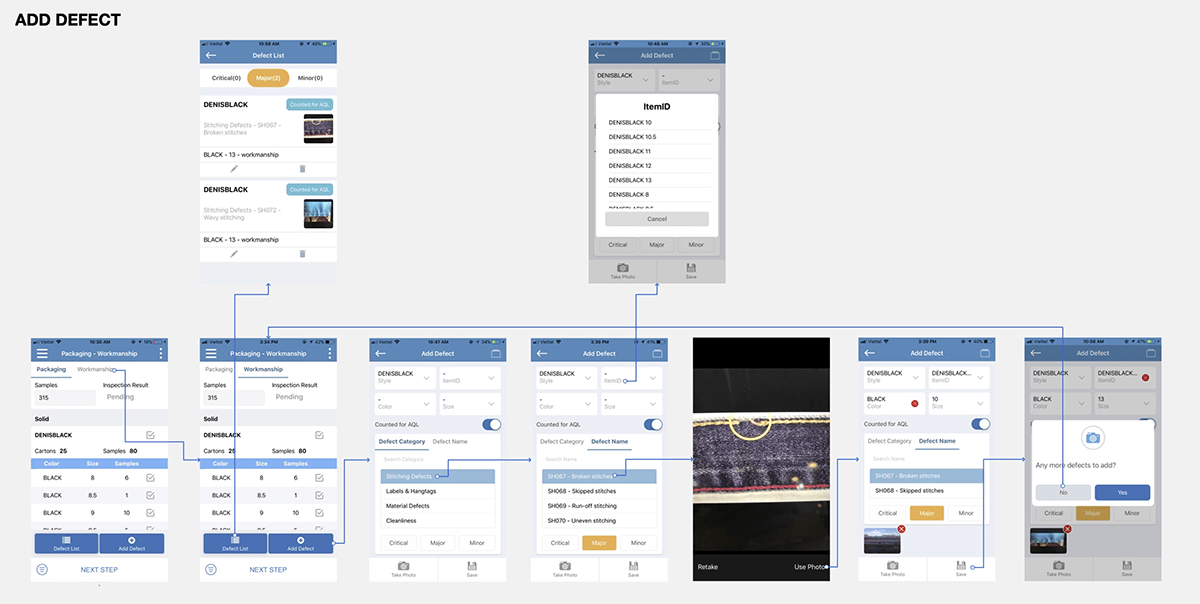

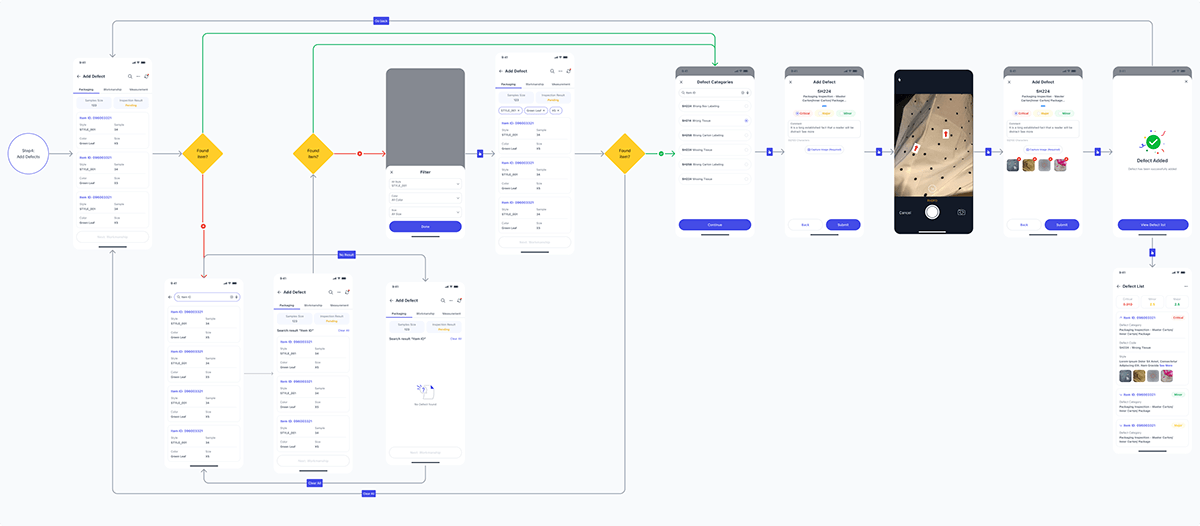

Error Recording Screen Interface: Unfriendly and Confusing for Users

In the current interface, users must navigate through several cumbersome steps to add error information: selecting an item, then choosing the Item ID, followed by Size & Colors, selecting a defect category, and finally the defect list from a tab next to it. After all these steps, they can take a photo and save the information. This process is inefficient and can cause confusion due to too many buttons and options on the screen.

To improve this, I decided to divide the process into four separate steps, each focusing on a specific task, making the process of adding defects clearer and easier. Although this might increase processing time, I believe it is worth the trade-off because users can now easily identify the necessary steps. Additionally, they can filter items by Size, Style, or Color, which was difficult previously because they had to remember the exact attributes of each item.

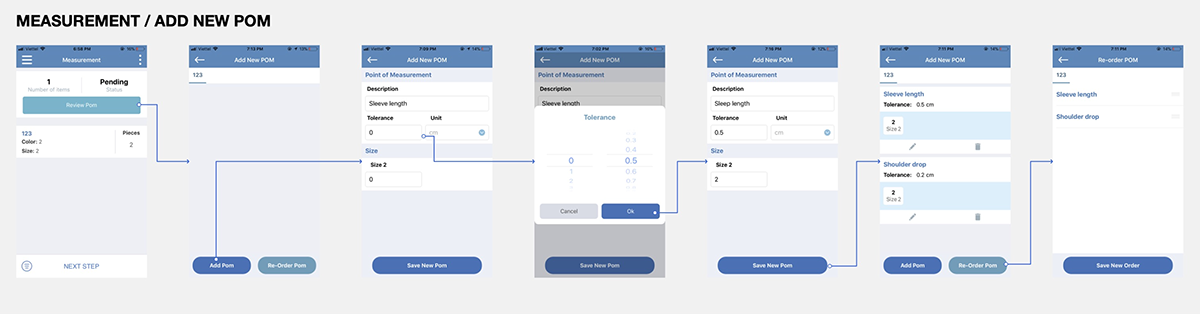

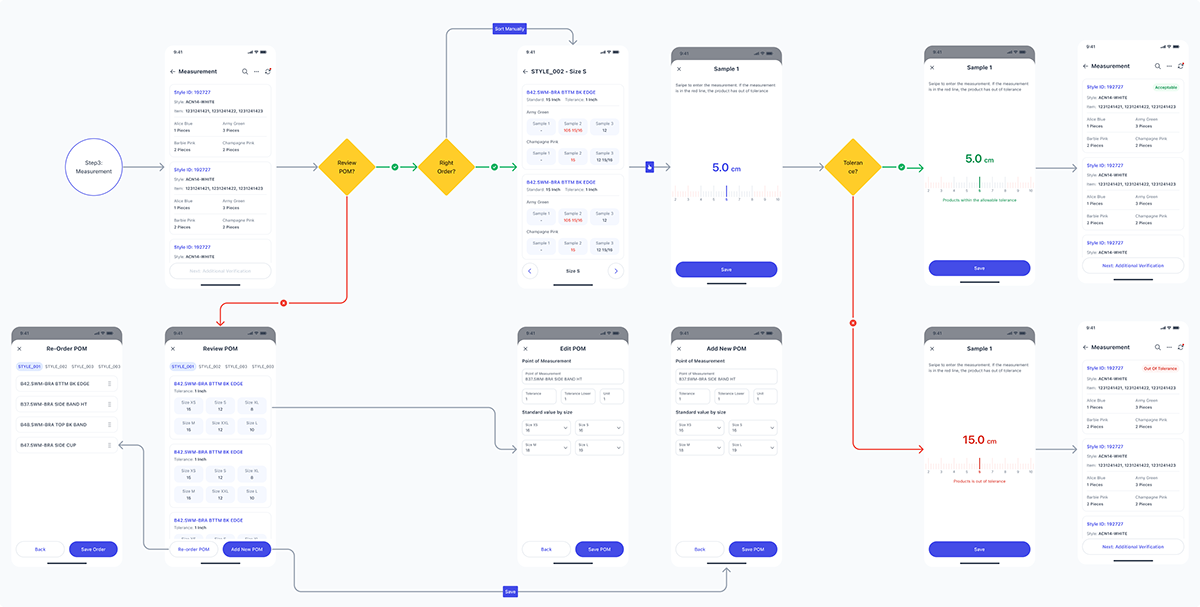

Measurement Method Issue

In the current design, inspectors have to measure each item one by one and record it immediately, which does not suit their habit of measuring multiple items at once before recording. To address this, I developed a virtual ruler that lets them slide to select a measurement, with two distinct color zones: green for values within the acceptable error margin and red for values outside it. This saves them time and avoids re-entering data.
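The logic behind the ruler's two color zones can be sketched roughly as follows. This is a minimal illustration under my own assumptions, not the production code: the `MeasurementPoint` fields, the centimeter units, the symmetric tolerance, and the sample values are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MeasurementPoint:
    """One point of measure on a product (hypothetical field names)."""
    name: str
    target_cm: float      # specified dimension
    tolerance_cm: float   # allowed deviation on either side of the target


def ruler_zone(point: MeasurementPoint, measured_cm: float) -> str:
    """Return "green" if the reading is within tolerance, otherwise "red"."""
    deviation = abs(measured_cm - point.target_cm)
    # Small epsilon so a reading exactly on the boundary is not
    # pushed into the red zone by floating-point rounding.
    return "green" if deviation <= point.tolerance_cm + 1e-9 else "red"


def record_batch(point: MeasurementPoint, readings):
    """Classify several readings in one go, as inspectors measure in batches."""
    return [(value, ruler_zone(point, value)) for value in readings]


# Illustrative values only.
chest = MeasurementPoint(name="chest width", target_cm=52.0, tolerance_cm=1.0)
results = record_batch(chest, [51.5, 52.0, 53.0, 53.6])
for value, zone in results:
    print(f"{value:5.1f} cm -> {zone}")
```

Accepting a list of readings per measurement point mirrors the batch habit described above: the inspector measures several items first, then records them together.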

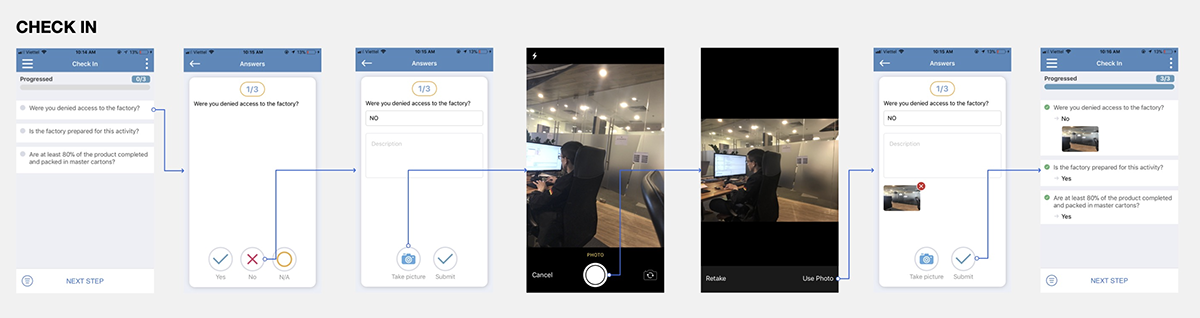

Improving the Questionnaire Completion Process

Currently, users struggle because they have to press the back button after each question. To address this, we will design the interface in a card format, allowing users to swipe left or right to move to the next question. Additionally, the photo capture screen will automatically display for questions that require an image, and the system will automatically advance to the next question after the user responds, saving time and improving user experience.
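The intended flow (answer, open the camera when a photo is required, then auto-advance) can be sketched as a small state holder. This is a simplified illustration, not the app's actual implementation; the class names, the returned screen names, and the sample questions are assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Question:
    text: str
    requires_photo: bool = False
    answer: Optional[str] = None
    photo: Optional[str] = None  # hypothetical: path of the captured image

    def is_complete(self) -> bool:
        if self.answer is None:
            return False
        return self.photo is not None or not self.requires_photo


class Questionnaire:
    def __init__(self, questions):
        self.questions = list(questions)
        self.index = 0

    def current(self) -> Question:
        return self.questions[self.index]

    def answer(self, value: str, photo: Optional[str] = None) -> str:
        """Record an answer and return the name of the next screen to show."""
        question = self.current()
        question.answer = value
        if photo is not None:
            question.photo = photo
        if not question.is_complete():
            return "camera"  # photo still missing: open capture automatically
        if self.index < len(self.questions) - 1:
            self.index += 1  # auto-advance, no back button needed
            return "next_question"
        return "summary"

    def attach_photo(self, photo: str) -> str:
        """Attach a photo to the current question, keeping its answer."""
        return self.answer(self.current().answer, photo)


# Illustrative questions only.
form = Questionnaire([
    Question("Is the carton sealed?"),
    Question("Is the label correct?", requires_photo=True),
])
steps = [form.answer("yes"), form.answer("no"), form.attach_photo("label.jpg")]
print(steps)
```

Because the photo requirement is checked at answer time, the user never reaches the submit button with a missing image, which is the error case reported in the research.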

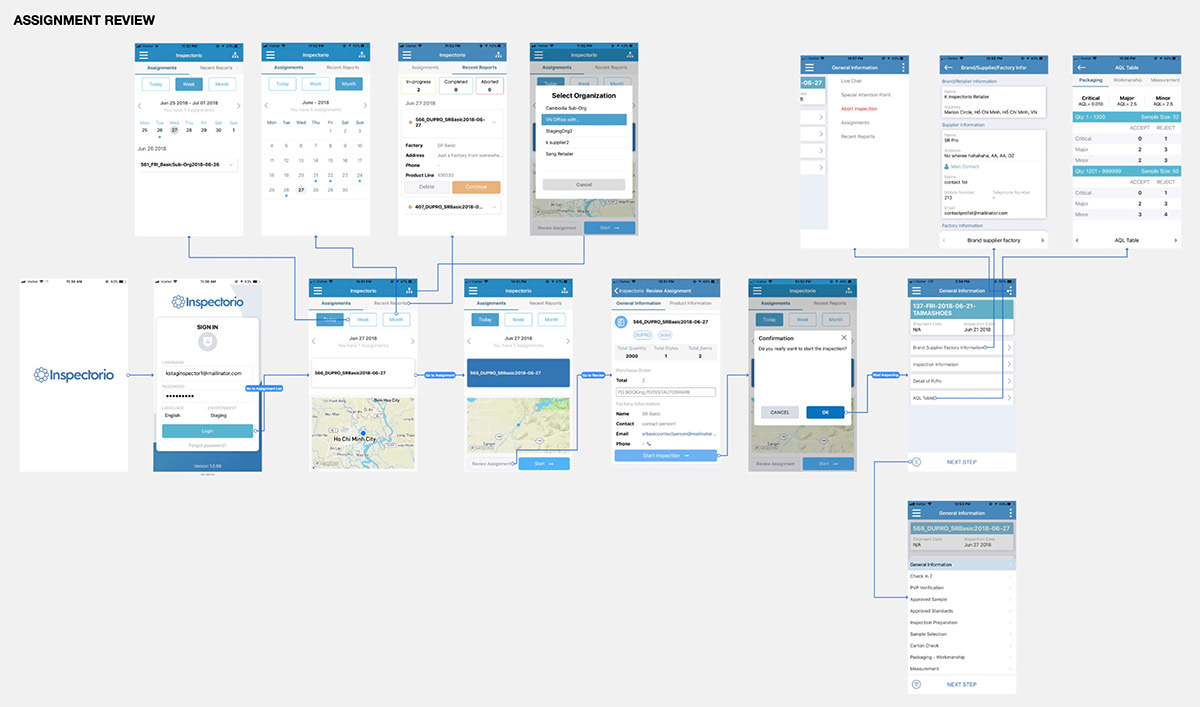

Assessment and Improvement of Task Assignment Process

In an effort not only to address current issues but also to enhance the user experience across different screens, I have revisited the task assignment process to identify ways to improve:

‣ Redesigning the Calendar: I have redesigned the calendar to allow users to easily scroll up or down and view all the days of the month. This enables them to quickly identify tasks that have been assigned and plan their work efficiently. Users can switch between days quickly, enhancing time management and work efficiency.

‣ Improving the Design of Inspection Cards: The inspection cards have been redesigned to be more intuitive, with information arranged logically, completely, and neatly. A new feature allows users to collapse the cards to save screen space, helping them manage multiple tasks more easily within a single interface.

‣ Redesigning the Assignment Detail Page: This page has been improved with a clearly designed and easy-to-view map. Information related to assigned tasks has been reorganized into a new, modern, and more intuitive layout. Users can quickly view essential information before starting a task, which helps minimize errors and save preparation time.

Usability Testing

After completing the designs, I will integrate them into a prototype board that closely resembles the actual application, so that users can try it and provide feedback.

Test Plan

‣ Prepare the Script: I will prepare a list of questions to ask users during their interaction with the prototype. This helps control the testing process and prevents random user interactions that could lead to serious errors.

‣ Participant Selection: We will choose users based on their familiarity with the previous application to ensure more accurate feedback.

‣ Focus Group Method: Each test group will have a person to read the questions, record audio, video, and take notes on responses.

‣ For Remote Users: We will use software to record facial expressions and screen interactions, helping us better understand their emotions during the experience.

Test Objectives:

The purpose of Usability Testing is to verify if our solution fits the users' workflow and to gather the most valuable feedback.

Test Results

Some users treated the test as a mandatory task and did not offer much feedback.

Others just wanted us to listen to their opinions, showing little interest in the content we presented.

Some were very enthusiastic about contributing feedback, providing detailed explanations of what they liked and disliked, thus helping us gain a comprehensive view and make suitable adjustments to the application.

Summary

Redesigning the app was a challenging task, mainly because we didn't have a lot of time and there weren't many references out there since not many competitors were around. Being trailblazers means taking some risks, right?

We put our all into it and learned from what we went through, using what we knew to get the best results as fast as we could. We're still pushing for recognition and always working on the design and making it better, so our app can help as many people as possible and be the best it can be.